699

General 699 pages from the archived wiki.

- 699 Software

- 699util

- 699util User Scripts

- Alerts

- Archived SAGE Versions

- BASIC Script Tools

- Bugzilla

- c699util Developer's Guide

- c699util User's Guide

- Database Patch Files

- Gnuplot

- MOMA GSE Computer Info

- Introduction to the 699 Python Library

- ISAMS

- LaTeX

- Lxml

- Melissa Trainer

- Mike Wong

- Old Main Page

- Python Performance

- Python Tool Installation

- Setup for Qt Development

- Setup Notes

- Subversion

- Supplementary Files and Telemetry File Preprocessing

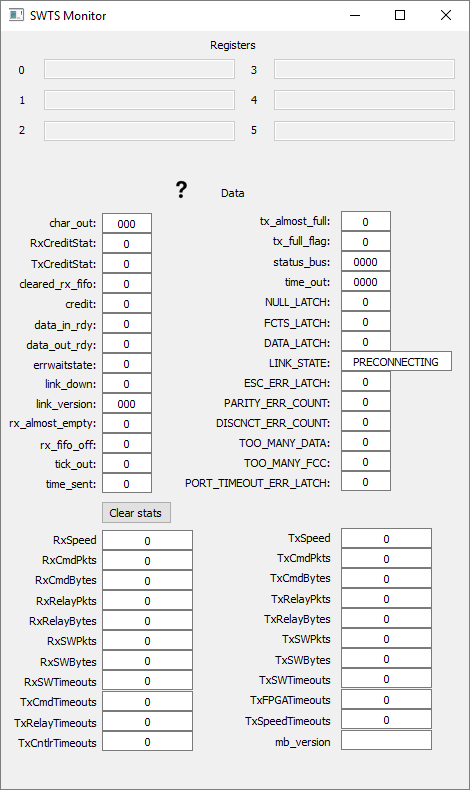

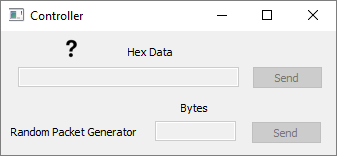

- SWTS ENET User Guide

- Telemetry Database Files

- Telemetry Metadata File

- Time Synchronization Notes

- Tm2igor Quickstart

- TunnelManager

- Updating mine699

699 Software

General Information

Update Mailing List

You can sign up for the SAM Software Mailing list at https://lists.nasa.gov/mailman/listinfo/sam-software-release. Doing so will keep you notified of any software updates for XINA, SAM Data View, and Python Scripts!

Bug Reporting

Report all bugs to our Bugzilla server at https://wush.net/bugzilla/sw699/. Use the "Open a New Account" button to set up a new account. You can use this for both bug reports and feature requests.

Installation

These steps install SAM Data View, Python 2.7, and the SAM Python utilities:

Mac OS X

Download and install the following files in order:

- Download the Mac OS X Python Installer from the Python.org homepage. Open the disk image and run the enclosed installer package. You will (probably) never have to update Python again, as far as SAM software is concerned. You can ignore this step when updating in the future.

- Download the source code archive of the most recent 699util distribution. Instructions for installing Python modules from source code archives are also on that page.

- Download the Mac Installer from the SAM Data View page. Open the disk image and run the enclosed installer package.

- Head to the XINA Client Install Guide page and follow the instructions to install the XINA client.

System Requirements

SAM Data View for Mac was built for 64-bit Mac OS X 10.6.8. In our limited experience with OS X 10.7 ("Lion"), SAM Data View installs and runs without problems, though we are not yet certain that it works on all 10.7 systems. We do not expect much demand for a 32-bit Mac version of SAM Data View, but if there is, we will build a 32-bit installer. The Python tools and XINA work on any OS, provided Python and Java, respectively, are installed.

Windows

Download and install the following files in order:

- Download the appropriate installer from the Python.org download page: Windows 32-bit or Windows 64-bit.

- Download the Windows installer from the most current distribution of 699util. Windows 7 and Vista users will need to right-click on the file after it has downloaded and choose "Install as Administrator..."

- Head to the XINA Client Install Guide page and follow the instructions to install the XINA client.

- Download the current Windows Installer for SAM Data View (note: requires reboot after installation).

Individual Software Pages

- TunnelManager

- SAM Data View

- 699util (Python Tools)

- SAM PDS Procedure

- IGOR Software

- Developer Computer Setup

- Setup Notes (for other computers)

- Notes on Python Performance (Developers only)

- Personal User Pages:

  - Melissa Trainer: IGOR scripts for atmospheric analysis

  - Mike Wong: IGOR scripts for atmospheric analysis

Miscellaneous

Mac Gnuplot Installation

Short story: for legal reasons, Apple does not ship the GNU Readline library by default. The replacement library (libedit) does not have all the functionality gnuplot needs, and this often causes errors.

- Download the latest gnuplot source: http://sourceforge.net/projects/gnuplot/files/.

- Download the latest version of GNU Readline: ftp://ftp.cwru.edu/pub/bash/readline-6.2.tar.gz.

- Untar it, `cd` to the new folder, and execute `./configure --prefix=/usr`, then `make everything`, then `sudo make install`.

- Then `cd` into the gnuplot source folder and run `./configure`, `make`, and `sudo make install`.

Mac lxml Installation

There are two big obstacles to installing lxml on a Mac. First, the standard Mac libxml2 is often woefully out of date (at least up to 10.7; 10.8 is far better). To get a good version of libxml2, the lxml developers provide a way to build libxml2 as a static library and compile it into lxml. Second, the latest version of libxml2 at the time of writing (2.9.0) has a bug that prevents the usual tools, such as pip, from working. (If 2.9.1 is ever released, `STATIC_DEPS=true pip install lxml` will work. If you need sudo, put the variable between sudo and pip: `sudo STATIC_DEPS=true pip install lxml`.)

- Download the latest version of lxml from PyPI: http://pypi.python.org/pypi/lxml

- Untar it, `cd` to the new folder, and execute `python setup.py build --static-deps --libxml2-version=2.8.0`. You may need `sudo` permissions, depending on your setup.

699util

This page presents a general overview of working with Python for SAM data processing tasks.

Note for SAM users: distribution through .dmg files is no longer recommended. See PDL_Setup.

Note for MOMA users: MOMA Python packages can be found at MOMA_Python_Packages.

Installation Instructions

For installation instructions, go to the Python Tool Installation page.

Bug Reports

Please do not report bugs on this wiki. Instead, use the Bugzilla server we have set up for bug tracking and reporting. Once you have set up an account, you may file a bug for the python software at the page for Command-line Utils.

List of Scripts

| Script Name | Description |
|---|---|
| checksum.py | Calculate the Fletcher checksum of input or of a list of existing files. |
| datavol.py | Estimate the data volume of a telemetry file. |
| faradaycup.py | Correlate Faraday cup scans to Faraday cup currents. |
| feiget.py | Download data from FEI. |
| fei2tm.py | Convert EDRs or DPs to a telemetry file. |
| gcms.py | Print a tab-delimited table of GCMS data organized by both mass and time. |
| gcsci.py | Print GC TCD data in a tab-delimited table. |
| gcsciplot.py | Plot GC TCD data, with or without TCD offset correction. |
| getcsvreports | Script for PDL to download MSL engineering reports and sort their contents by sol. |
| hkdump.py | Print the values in a SAM type-10 housekeeping packet. |
| hot.py | Create a tab-delimited CSV file showing the cumulative number of tics the heaters have been on. |
| htrduty.py | Plot the duty cycle and temperature of a given SAM heater. |
| listfei.py | List and sort the DPs or EDRs in the current working directory. |
| massrange.py | Plot the masses and bands being scanned at a given time. |
| movefei.py | Move files downloaded from FEI into the appropriate TID folder. |
| ontime.py | Calculate how long a given component was "on" (e.g., how long a valve was open, or how long an HK value was above a certain threshold). |
| phd.py | Plot the pulse-height distribution at a given marker ID. |
| powercut.py | Cut a power profile into two independent power profiles. |
| ql_ega.py | Generate the plots for a quicklook. |
| runall.py | Run a command or Python code in every telemetry directory. |
| sclk2scet.py | Convert an SCLK timestamp to SCET time. |
| t0.py | Display the start time of a telemetry file in various formats. |
| timestamp.py | Print the start time of the file in a human-readable format. |
| tm2rdr.py | Create a Reduced Data Record from a telemetry file. |
| tmdb.py | Get the axis name for a database entry (meant to be used inside shell backticks). |
| tmdiff.py | A diff-like program for telemetry files. |
| tmdump.py | Produce a hex dump of a telemetry file, packet by packet. |
| tmexcerpt.py | Create a telemetry file containing only a certain marker or packet type. |
| tmfields.py | Print a tab-delimited table of housekeeping data. |
| tmglue.py | Concatenate two telemetry files. |
| tmkeys.py | Search for and display the telemetry database. |
| tmmarker.py | Print a list of the markers and marker IDs in the telemetry file. |
| tmmsg.py | Print the contents of the message log packets. |
| tmplot.py | Plot housekeeping data. |
| tmsanitize.py | Remove malformed data from a packet. |
| tmscan.py | Plot a DAC scan. |
| tmsequence.py | Print a report detailing whether packet sequence numbers increase normally. |
| tmstat.py | Print the statistics of a given HK value (minimum, median, maximum, mean). |
| tmsummary.py | Print a summary of each packet in a telemetry file. |
| triplets.py | Print a tab-delimited table of GCMS data organized by time. |
| valvestat.py | Print the valve operations (like the tmvalves viewer in SAM Data View). |
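As an illustration of the kind of calculation ontime.py describes, the sketch below totals the time an HK value spends above a threshold. It is not the ontime.py source: the (time, value) sample format, the strict `>` comparison, and the name `total_on_time` are assumptions. It also counts a stretch that is still "on" when the data ends, the case addressed by the ontime.py fix in release 2.00.01.

```python
def total_on_time(samples, threshold):
    """Total time during which a value was strictly above `threshold`.

    `samples` is an iterable of (time, value) pairs in time order. An "on"
    stretch starts at the first sample above the threshold and ends at the
    next sample that is not; a stretch still open at the last sample is
    closed at that sample's time.
    """
    total = 0.0
    start = None   # start time of the current "on" stretch, if any
    last_t = None  # time of the most recent sample
    for t, value in samples:
        last_t = t
        if value > threshold:
            if start is None:
                start = t
        elif start is not None:
            total += t - start
            start = None
    # Count the final stretch if the value was still "on" when the data ended.
    if start is not None:
        total += last_t - start
    return total


# Above 5 from t=1 to t=3, then again from t=6 until the data ends at t=8.
samples = [(0, 0), (1, 10), (2, 10), (3, 0), (6, 7), (8, 9)]
print(total_on_time(samples, 5))  # 4.0
```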

Version History

1.00.00

Source: tmutil-1.00.00.tar.gz

Release Date: 2011-11-18

- Python modules and scripts for processing telemetry data.

1.00.01

Source: tmutil-1.00.01.tar.gz

Release Date: 2011-11-22

- Updated tmenv to look for $HOME/SAM/gse/ in addition to the original $HOME/gse/ setup.

- Fixed a bug which caused the GC-specific sequence number of GC HK Packets to overwrite the packet sequence number.

1.00.02

Source: tmutil-1.00.02.tar.gz

Release Date: 2011-11-30

- Fixed bug which caused tmplot.py to crash when data was missing.

1.00.03

Source: tmutil-1.00.03.tar.gz

Release Date: 2011-12-02

- Added checksum.py script for calculating checksums of files.

- Fixed a bug that caused find_tmfile to determine the CWD once at the start of execution rather than each time the function was called.

- Made relative time default for triplets.py.

- Added regular expression-type searching for tmmsg.py, and grep-like -v (invert) option.

- Added plotref and limref scripts for LADEE EBT processing.

- Numerous bugfixes for problems observed in first round of regression testing.

1.00.04

Source: tmutil-1.00.04.tar.gz

Release Date: 2011-12-15

- Overhauled dead time correction.

- Fixed bug with showing marker IDs in tmplot.

- Fixed boot time correction for LADEE/MAVEN to avoid problems caused by race condition with time sync.

1.00.05

Source: tmutil-1.00.05.tar.gz

Release Date: 2012-01-06

- Simplified options in tmfields.py.

- Added limref

- Added plotref

- Added rf_subpacket_cal.py

- Added subpacket_timing.py

- Added on-line help for almost every script (i.e., the -h option)

1.00.06

Source: tmutil-1.00.06.tar.gz

Release Date: 2012-01-09

- Added background subtraction functions.

- Fixed infinite loop bug in tmread.util.step_pair.

1.00.07

Source: (missing)

Release Date: 2012-01-15

- Added newtid.py

- Added godata command for the shell.

- Changed sorting behavior in tmdir to use date rather than TID.

1.01.00

Source: tmutil-1.01.00.tar.gz

Release Date: 2012-03-13

- Added support for MAVEN NGMS data.

- Added lib699 package for functions not specific to telemetry.

- Added checksum.py to default distribution.

- Added massrange.py.

- Added ontime.py.

- Added t0.py.

- Added tmstat.py.

- Added tmsummary.py.

1.01.01

Source: tmutil-1.01.01.tar.gz

Release Date: 2012-03-14

- Fixed grievous errors that prevented installation.

1.02.00

Source: 699util-1.02.00.tar.gz

Revision: 66

Release Date: 2012-04-05

- Bundled TMUtil and SamUtil into 699util.

- Added support for SVN operations scripts.

- Improvements to lib699.

- Workaround for total_ordering bug in Python 2.7.1.

1.02.01

Source: 699util-1.02.01.tar.gz

Revision: 69

Release Date: 2012-05-10

- Fixed bug with setting dac mode in triplets.py.

1.02.02

Source: 699util-1.02.02.tar.gz

Revision: 88

Release Date: 2012-05-14

- Backend changes to Mission and QMS Science Packet classes.

- Incorporation of a regression-testing framework (not distributed).

1.03.00

Source: 699util-1.03.00.tar.gz

Revision: 118

Release Date: 2012-05-24

- Incorporated support for time correction as a configurable file. Abandoned old concept of "offset correction."

- Added scripts for generating time configuration files from MSL-generated SCLK-SCET files.

1.03.01

Source: 699util-1.03.01.tar.gz

Revision: 150

Release Date: 2012-06-19

- Added gcsciplot.py

- Added override of Gnuplot.py's insistence on using "aqua" as the default terminal for Macs.

- Backend restructuring of feiutil.

- Added highlighting support to lib699.ttyutil and tmmsg.

- Added preliminary support for SAM Testbed.

1.03.02

Source: 699util-1.03.02.tar.gz

Revision: 167

Release Date: 2012-07-05

- Now tolerates missing SCLK/SCET file (issues a warning).

- Updates to tm2rdr.py and pds3 package.

1.04.00

Source: 699util-1.04.00.tar.gz

Revision: 326

Release Date: 2012-10-06

- Added lots of scripts, including lingering scripts that had not yet been ported to 699util and new SAM ops software

- Added lib699.collections

- Fixed svnutil performance bugs

- Partial Python 3 support (not fully implemented)

- tmenv moved into tmread, now uses .699config.INI file

- Added XINA SVN post-commit hooks

1.05.00

Source: 699util-1.05.00.tar.gz (zip version: 699util-1.05.00.zip)

Windows Installer: 699util-1.05.00.macosx-10.6-intel.exe

Revision: 702

Release Date: 2013-04-17

- Incorporates every software change since October 2012 (too many to list).

1.05.01

Source: 699util-1.05.01.tar.gz (zip version: 699util-1.05.01.zip)

Windows Installer: 699util-1.05.01.exe

Revision: 797

Release Date: 2013-05-31

- Fixed a bug with dead-time correction that caused incorrect data to be printed.

- Packet resequencing now based on packet "creation time" rather than packet timestamp.

- Fixed bug in tmfields which caused program to crash when a telemetry file did not have a defined TID.

- Many minor backend changes and bug fixes.

2.00.00

DMG (Mac) Installation Package: https://s3.amazonaws.com/699_bin/699util/699util-2.00.dmg

TAR Archive (Mac/Linux) Installation Package: https://s3.amazonaws.com/699_bin/699util/699util-2.00-installer.tar.gz

Revision: 1242

Release Date: 2013-09-05

- Now supports Python 3 instead of Python 2

- Improvements to packet parsing behavior

- Representation of time as discrete units

- Reorganization of scripts

- Streamlining of gnuplot interface

2.00.01

DMG (Mac) Installation Package: https://s3.amazonaws.com/699_bin/699util/699util-2.00.01.dmg

TAR Archive (Mac/Linux) Installation Package: https://s3.amazonaws.com/699_bin/699util/699util-2.00.01-installer.tar.gz

Revision: 1249

Release Date: 2013-09-12

- Added tm2igor.pl and igormeta.py.

- Fixed bug with ontime.py; now correctly tracks the last stretch for which a value is considered "on"

- Added samvtool alias to shell configuration script.

2.00.02

DMG (Mac) Installation Package: https://s3.amazonaws.com/699_bin/699util/699util-2.00.02.dmg

TAR Archive (Mac/Linux) Installation Package: https://s3.amazonaws.com/699_bin/699util/699util-2.00.02-installer.tar.gz

Revision: 1256

Release Date: 2013-09-13

- Added smscuplog.py.

- Full working version of nms2pds.py.

- Added nms_assemble.py.

Workaround (from Mike Wong): if installation fails with the error `AttributeError: _convert` while building the enum34 package, try the following:

- Delete the tarfile enum34-1.0.tar.gz that came in the 699util .dmg disk image.

- Replace it with a newer tarfile from a link such as pypi.python.org/pypi/enum34/1.1.6.

- Edit the file requirements.txt, replacing "enum34==1.0" with "enum34>=1.1.6". Then run the install script again.
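The requirements.txt edit in the last step can also be done with a few lines of Python. This is a minimal sketch; the helper name `relax_enum34_pin` is hypothetical, and it assumes the pin appears on its own line exactly as `enum34==1.0`.

```python
def relax_enum34_pin(text):
    """Return `text` with any line pinning exactly 'enum34==1.0' changed to
    'enum34>=1.1.6'. Other lines and all line endings are left unchanged."""
    out = []
    for line in text.splitlines(keepends=True):
        if line.strip() == "enum34==1.0":
            ending = line[len(line.rstrip("\r\n")):]  # "\n", "\r\n", or ""
            out.append("enum34>=1.1.6" + ending)
        else:
            out.append(line)
    return "".join(out)


# Usage (run inside the unpacked installer directory):
#   from pathlib import Path
#   req = Path("requirements.txt")
#   req.write_text(relax_enum34_pin(req.read_text()))
```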

699util User Scripts

This page has a list of user-written scripts that aren't included in production versions of 699util. They are not guaranteed to work as the libraries get updated.

- http://s3.amazonaws.com/699_bin/userscripts/addnewfm

- http://s3.amazonaws.com/699_bin/userscripts/addtid.sh

- http://s3.amazonaws.com/699_bin/userscripts/allrev_co.sh

- http://s3.amazonaws.com/699_bin/userscripts/asist2tm.py

- http://s3.amazonaws.com/699_bin/userscripts/basicdata.py

- http://s3.amazonaws.com/699_bin/userscripts/checkout_tid.py

- http://s3.amazonaws.com/699_bin/userscripts/dacInfo.py

- http://s3.amazonaws.com/699_bin/userscripts/dacStat.py

- http://s3.amazonaws.com/699_bin/userscripts/datavol.py

- http://s3.amazonaws.com/699_bin/userscripts/deliver2sol

- http://s3.amazonaws.com/699_bin/userscripts/evrcut

- http://s3.amazonaws.com/699_bin/userscripts/fake_asist_server.py

- http://s3.amazonaws.com/699_bin/userscripts/genexpseq.pl

- http://s3.amazonaws.com/699_bin/userscripts/genvlvop.pl

- http://s3.amazonaws.com/699_bin/userscripts/hkdump.py

- http://s3.amazonaws.com/699_bin/userscripts/htr_table_dump.py

- http://s3.amazonaws.com/699_bin/userscripts/ladeecal.py

- http://s3.amazonaws.com/699_bin/userscripts/ladlib_compare.pl

- http://s3.amazonaws.com/699_bin/userscripts/ladlib_insert.pl

- http://s3.amazonaws.com/699_bin/userscripts/m50.py

- http://s3.amazonaws.com/699_bin/userscripts/m50limits.py

- http://s3.amazonaws.com/699_bin/userscripts/mavensoc2tm.py

- http://s3.amazonaws.com/699_bin/userscripts/mkdir_tid.py

- http://s3.amazonaws.com/699_bin/userscripts/mkj2000sclk.py

- http://s3.amazonaws.com/699_bin/userscripts/mkladmavsclk.py

- http://s3.amazonaws.com/699_bin/userscripts/mlfilter.sh

- http://s3.amazonaws.com/699_bin/userscripts/monchk.py

- http://s3.amazonaws.com/699_bin/userscripts/ngims_data_mining_electrodes.sh

- http://s3.amazonaws.com/699_bin/userscripts/ngims_data_mining_m50.sh

- http://s3.amazonaws.com/699_bin/userscripts/ngimsdemomine.py

- http://s3.amazonaws.com/699_bin/userscripts/penergy

- http://s3.amazonaws.com/699_bin/userscripts/pkim2limits.py

- http://s3.amazonaws.com/699_bin/userscripts/power_expand.py

- http://s3.amazonaws.com/699_bin/userscripts/pwmdump.py

- http://s3.amazonaws.com/699_bin/userscripts/ramobs.py

- http://s3.amazonaws.com/699_bin/userscripts/rf_subpacket_cal.py

- http://s3.amazonaws.com/699_bin/userscripts/samlib_compare.pl

- http://s3.amazonaws.com/699_bin/userscripts/samlib_insert.pl

- http://s3.amazonaws.com/699_bin/userscripts/scanavg.py

- http://s3.amazonaws.com/699_bin/userscripts/scet2unix.py

- http://s3.amazonaws.com/699_bin/userscripts/smshk.c

- http://s3.amazonaws.com/699_bin/userscripts/stripbas.pl

- http://s3.amazonaws.com/699_bin/userscripts/subpacket_timing.py

- http://s3.amazonaws.com/699_bin/userscripts/svnutil.py

- http://s3.amazonaws.com/699_bin/userscripts/tb_masstbl.py

- http://s3.amazonaws.com/699_bin/userscripts/tcctid.py

- http://s3.amazonaws.com/699_bin/userscripts/thermcut

- http://s3.amazonaws.com/699_bin/userscripts/timestamp.py

- http://s3.amazonaws.com/699_bin/userscripts/tmdb.py

- http://s3.amazonaws.com/699_bin/userscripts/tmenv2ini.py

- http://s3.amazonaws.com/699_bin/userscripts/tmglue.py

- http://s3.amazonaws.com/699_bin/userscripts/untunnel

- http://s3.amazonaws.com/699_bin/userscripts/xinahooktestsetup.sh

Alerts

Alerts are messages generated during mining that often serve as a notice or warning that a certain threshold or event occurred. Each alert contains a severity, a time, and a specific message.

MOMA Alerts

General Error Check

- Alert ID: 1

- Severity: Notice

- Description: Grep message log for "Error"

MAIF APV Comm

- Alert ID: 2

- Severity: Warning

- Description: Grep message log for "ldi: error"
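Alerts 1 and 2 amount to text searches of the message log. A minimal sketch, assuming the log is available as a sequence of text lines and that matching is a case-sensitive substring test like plain grep; the names `MESSAGE_LOG_PATTERNS` and `message_log_alerts` are hypothetical, not the mining code's.

```python
# Alert ID -> substring to look for, taken from the alert descriptions.
MESSAGE_LOG_PATTERNS = {
    1: "Error",       # General Error Check (Notice)
    2: "ldi: error",  # MAIF APV Comm (Warning)
}


def message_log_alerts(lines):
    """Return (alert_id, line_number, line) for each message-log line that
    contains one of the patterns. Matching is case-sensitive, like plain grep.
    """
    hits = []
    for number, line in enumerate(lines, start=1):
        for alert_id, pattern in MESSAGE_LOG_PATTERNS.items():
            if pattern in line:
                hits.append((alert_id, number, line.rstrip("\n")))
    return hits


log = ["MAIF ldi: error 0x3", "Boot OK", "CDH Error: timeout"]
for hit in message_log_alerts(log):
    print(hit)
```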

Spacewire Comm Error

- Alert ID: 3

- Severity: Warning/Notice

- Description: Spacewire Parity (HK 522) > 0 or Spacewire Disconnect (HK 523) > 0 after 30sec

MOMA Safe Event

- Alert ID: 4

- Severity: Notice

- Description: HK 507 MAX, MOMA_STATUS_SAFE > 0 at any time during a TID

WRP Too Fast

- Alert ID: 5

- Severity: Notice

- Description: PWM Speed - HK 832 MAX > 103000

WRP Failed To Start

- Alert ID: 6

- Severity: Warning

- Description: PWM Speed - HK 832, MAX > 1000 and < 99000 RPM
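Alerts 5 and 6 both depend only on the maximum PWM speed reported in HK 832. A minimal sketch, assuming the samples are a list of speeds in RPM (alert 5 states no unit; RPM is assumed to match alert 6); `wrp_speed_alerts` is a hypothetical name.

```python
def wrp_speed_alerts(pwm_speeds_rpm):
    """Evaluate alerts 5 and 6 from PWM speed samples (HK 832), in RPM.

    Both alerts look only at the maximum speed seen:
      alert 5 (WRP Too Fast):        max > 103000
      alert 6 (WRP Failed To Start): 1000 < max < 99000
    Returns the list of triggered alert IDs.
    """
    if not pwm_speeds_rpm:
        return []
    peak = max(pwm_speeds_rpm)
    alerts = []
    if peak > 103000:
        alerts.append(5)
    if 1000 < peak < 99000:
        alerts.append(6)
    return alerts
```

A maximum at or below 1000 RPM triggers neither alert, which is how the descriptions read when the pump was simply never commanded on.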

WRP Pressure Spike

- Alert ID: 7

- Severity: Warning

- Description: HK 830, MOT_SUP_IMON, MAX > 1.1A

Dark Counts

- Alert ID: 8

- Severity: Warning

- Description: HK 252 SCAN_TELEM_DAC_ID = 20000 AND HK 253 SCAN_TELEM_DAC_VAL > 5

Laser Burst Energy Variation

- Alert ID: 9

- Severity: Info

- Description: Std. Dev. of burst pulse energy is > 5.0
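A minimal sketch of the alert 9 test. The description does not say whether the sample or population standard deviation is meant, or what units the energies are in; this assumes the sample standard deviation from Python's `statistics.stdev`, and `burst_energy_variation` is a hypothetical name.

```python
import statistics


def burst_energy_variation(pulse_energies, limit=5.0):
    """Return True if alert 9 (Laser Burst Energy Variation) should fire.

    Uses the sample standard deviation; a burst with fewer than two pulses
    has none, so it never triggers the alert.
    """
    if len(pulse_energies) < 2:
        return False
    return statistics.stdev(pulse_energies) > limit
```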

Laser Pulses Mismatch

- Alert ID: 10

- Severity: Notice

- Description: Main Pulses != # of Pulses in Burst

Unknown SEB sequences

- Alert ID: 11

- Severity: Notice

- Description: Scan type is LDI or EI, and SEB packet sequence count == 0

Filament VMON Pegged

- Alert ID: 12

- Severity: Warning

- Description: Filament VMON HK is > 4.9 at any time

Expected Pulse Count Mismatch

- Alert ID: 13

- Severity: Warning

- Description: HKID 1050 (PLS_SUBPKT_CNT) != ((pkt.length() - 4) / 16)
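A minimal sketch of the alert 13 comparison, reading the expected count as `(pkt.length() - 4) / 16` with integer division (without the parentheses, `pkt.length() - 4 / 16` would subtract 0.25, or nothing under integer division, from the length). The function names are hypothetical, and the packet length is assumed to be passed in as an integer.

```python
def expected_pulse_subpackets(pkt_length):
    """Expected pulse subpacket count: (length - 4) / 16, integer division."""
    return (pkt_length - 4) // 16


def pulse_count_mismatch(pls_subpkt_cnt, pkt_length):
    """Return True if alert 13 should fire: HKID 1050 disagrees with the
    count implied by the packet length."""
    return pls_subpkt_cnt != expected_pulse_subpackets(pkt_length)
```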

Gap in Scans

- Alert ID: 14

- Severity: Warning

- Description: Bin Delta between Science packets is > 100

Old Packet Format

- Alert ID: 15

- Severity: Info

- Description: Scan Packet Version Number != 2 (c_scan.science_data_pkt_version())

Missing Scan Status Packets

- Alert ID: 16

- Severity: Warning

- Description: Number of Scan Status Packets != Number of Scans in Summed Packet. Disabled for dark counts.

Dropped Packets

- Alert ID: 17

- Severity: Warning

- Description: Dropped packets are detected by gaps in the packets' sequence numbers.
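Sequence-number gap detection, as alert 17 describes, can be sketched as follows (tmsequence.py reports on the same property). The counter width is an assumption: the default modulus of 16384 corresponds to the 14-bit sequence count of a CCSDS space packet, so change it if MOMA packets wrap at a different value. `dropped_packets` is a hypothetical name.

```python
def dropped_packets(seq_numbers, modulus=16384):
    """Find gaps in a time-ordered stream of packet sequence numbers.

    `modulus` is the value at which the counter wraps back to 0 (16384, a
    14-bit counter, is an assumed default). Repeated numbers are ignored.
    Returns a list of (previous, current, missing_count) tuples.
    """
    gaps = []
    prev = None
    for seq in seq_numbers:
        if prev is not None:
            step = (seq - prev) % modulus  # handles wraparound to 0
            if step > 1:
                # step - 1 packets are missing between prev and seq
                # (an out-of-order packet also shows up here as a large gap)
                gaps.append((prev, seq, step - 1))
        prev = seq
    return gaps


print(dropped_packets([1, 2, 3, 5, 6]))  # [(3, 5, 1)]
```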

Both EMs On

- Alert ID: 18

- Severity: Warning

- Description: Both Electron Multipliers are on at the same time

Sub-system Communication Errors

- Alert ID: 19

- Severity: Warning

- Description: Communication errors between any of the sub-systems (MAIF, SEB, GC, LASER) as reported by the CDH/FSW.

WRP Instantaneous High Current

- Alert ID: 20

- Severity: Warning

- Description: The instantaneous WRP Current exceeded a threshold. A Problem Report should be opened if it happened on the Flight Model.

| Mass Flow | WRP Back Pressure | Instant WRP Current |
|---|---|---|
| MP_PRESS < 0.5 | 4 Torr | 0.55 A |
| MP_PRESS < 0.5 | 6 Torr | 0.65 A |
| MP_PRESS < 0.5 | 8 Torr | 0.75 A |
| MP_PRESS > 0.5 | 4 Torr | 0.70 A |
| MP_PRESS > 0.5 | 6 Torr | 0.80 A |
| MP_PRESS > 0.5 | 8 Torr | 0.90 A |
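The alert 20 thresholds can be expressed as a lookup keyed on the mass-flow condition and the back pressure, with values copied from the table above. A minimal sketch: the table does not say how back pressures between 4, 6, and 8 Torr or an MP_PRESS of exactly 0.5 are handled, so this version accepts only the tabulated pressures (raising `KeyError` otherwise) and, as an assumption, treats 0.5 as the lower row. The names are hypothetical.

```python
# (MP_PRESS above 0.5?, back pressure in Torr) -> instantaneous limit in A
INSTANT_WRP_LIMITS = {
    (False, 4): 0.55,
    (False, 6): 0.65,
    (False, 8): 0.75,
    (True, 4): 0.70,
    (True, 6): 0.80,
    (True, 8): 0.90,
}


def instant_wrp_alert(mp_press, back_pressure_torr, wrp_current_a):
    """Return True if alert 20 should fire for one current sample.

    Raises KeyError for a back pressure that is not in the table.
    MP_PRESS exactly 0.5 uses the low-flow rows (an assumption).
    """
    limit = INSTANT_WRP_LIMITS[(mp_press > 0.5, back_pressure_torr)]
    return wrp_current_a > limit
```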

WRP 1-Min Average High Current

- Alert ID: 21

- Severity: Warning

- Description: The 1-Min Avg WRP Current exceeded a threshold. A Problem Report should be submitted if it happened on the Flight Model.

| Mass Flow | WRP Back Pressure | 1-Min Avg WRP Current |
|---|---|---|
| MP_PRESS < 0.5 | 4 Torr | 0.45 A |
| MP_PRESS < 0.5 | 6 Torr | 0.55 A |
| MP_PRESS < 0.5 | 8 Torr | 0.65 A |
| MP_PRESS > 0.5 | 4 Torr | 0.60 A |
| MP_PRESS > 0.5 | 6 Torr | 0.70 A |
| MP_PRESS > 0.5 | 8 Torr | 0.80 A |
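For alert 21, the current is averaged over one minute before it is compared with the tabulated limit. The sketch below assumes an unweighted mean of the samples in a trailing 60-second window, since the page does not specify how the average is formed; the sample format (time in seconds, current in amperes) and the function names are hypothetical. The limit is passed in, e.g. 0.55 A for MP_PRESS < 0.5 at 6 Torr.

```python
from collections import deque


def one_minute_averages(samples, window_s=60.0):
    """Yield (time, trailing mean) for time-ordered (time_s, current_a) samples.

    The mean covers samples with time > t - window_s, i.e. an unweighted
    trailing one-minute window ending at the current sample.
    """
    window = deque()
    total = 0.0
    for t, current in samples:
        window.append((t, current))
        total += current
        # Drop samples that have fallen out of the window.
        while window[0][0] <= t - window_s:
            total -= window.popleft()[1]
        yield t, total / len(window)


def avg_wrp_alert_times(samples, limit_a):
    """Return the times at which the trailing 1-minute mean exceeds limit_a."""
    return [t for t, mean in one_minute_averages(samples) if mean > limit_a]


samples = [(0, 0.4), (30, 0.6), (59, 0.5), (120, 0.7)]
print(avg_wrp_alert_times(samples, 0.55))  # [120]
```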

Archived SAGE Versions

04/18/18:

Software: SAGE1.8.3.pxp

07/18/14:

Software: SAGE1.7.pxp

11/13/13:

Software: SAGE1.6.1.pxp.zip

02/19/13:

Software: SAGE1.5.2.pxp

01/29/13:

Software: SAGE1.5.1.pxp

11/30/12:

Software: SAGE1.4.pxp

10/19/12:

Software: SAGE1.3.pxp

9/26/12:

- Fixes the peak selection cursor bug

9/24/12:

9/20/12:

Presented at the GCMS focus group meeting: SAGE_update_09_20_12.pptx

9/7/12:

8/24/12:

SAM Igor tool v1.0: SAM1.0.pxp

Documentation for SAM Igor tool v1.0: SAM1.0Help.pdf

SAM IGOR previews:

BASIC Script Tools

Basic Editor

Recommended: Sublime Text

Sublime Text is a cross-platform editor with features that are useful for BASIC editing. In particular, if the script and samlib.bas are open in separate tabs in the same window, Option+Command+Down Arrow jumps to the definition in samlib.bas, and Ctrl+- (Control-hyphen) jumps back to the call in the main script.

To configure syntax highlighting, install the Basic package:

Mac OS X Installation

- Press Option key and select Go/Library from Finder menu

- Navigate to Application Support/Sublime Text 3/Packages/User

- Drag "Basic" folder into this directory

- Select the relevant mission from the language menu in the lower right of the Sublime window:

  - User/SAM Basic

  - User/MAVEN Basic

Windows Installation

- Download either the 32-bit or the 64-bit Windows version

- Run the executable file to install Sublime

- Relocate the contents of the "Basic" folder into: 'C:\Users\UserName\AppData\Roaming\Sublime Text 2\Packages\User'

- From the drop-down menus, select View > Syntax > User > SAM or MAVEN

Syntax Checker (basicyy)

Any modifications to Basic scripts should be validated with the command-line tool basicyy.

Mac OS X Installation

Tested on Mac OS 10.6, 10.7, and 10.8

- Download the basicyy.app package.

- Move basicyy.app into your /Applications directory

- Edit the .bash_profile file in your home directory to add a new alias:

alias basicyy="/Applications/basicyy.app/Contents/MacOS/basicyy --sam"

Omit the --sam flag if you write scripts for other missions.

In lieu of a mission flag, you may set the DEFAULT_MISSION environment variable.

- Edit the .699config.INI file in the relevant mission section to have a script_lib_dir or script_lib_file keyword. For example:

script_lib_dir = /Users/yourname/samgse/fswTables_FLIGHT

script_lib_file = /Users/yourname/mavengse/fswTables/mavlib.940

The default is to look for the Basic library in the ScriptsCDH directory inside the gse path defined in .699config.INI.
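The lookup described above can be sketched with Python's `configparser`. Several details are assumptions, not documented basicyy behavior: `script_lib_file` is checked before `script_lib_dir`, the gse path is stored under a key named `gse_dir` (a hypothetical name; the page does not give the key), and the mission section name is passed in directly or taken from `DEFAULT_MISSION`.

```python
import configparser
import os


def basic_library_location(config_text, mission=None):
    """Return the configured Basic library path for a mission.

    `mission` falls back to the DEFAULT_MISSION environment variable.
    Assumed precedence: script_lib_file, then script_lib_dir, then
    <gse dir>/ScriptsCDH. "gse_dir" is a hypothetical key name.
    """
    mission = mission or os.environ.get("DEFAULT_MISSION")
    if not mission:
        raise ValueError("no mission given and DEFAULT_MISSION is not set")
    # interpolation=None so paths containing '%' are read literally.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(config_text)
    section = parser[mission]
    for key in ("script_lib_file", "script_lib_dir"):
        if key in section:
            return section[key]
    return os.path.join(section["gse_dir"], "ScriptsCDH")


cfg = """[SAM]
script_lib_dir = /Users/yourname/samgse/fswTables_FLIGHT
"""
print(basic_library_location(cfg, "SAM"))
```

To read a real file, pass its text, e.g. `Path.home().joinpath(".699config.INI").read_text()`; the section name SAM used here is itself only an example.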

Bugzilla

Bugzilla is the server software that the 699 team uses to help manage software development. Both developers and users use it to submit bug reports and enhancement/feature requests. Our server is currently maintained by Wushnet and can be accessed at https://wush.net/bugzilla/sw699/.

Creating An Account

In order to submit bug tickets or feature requests, you must first create an account. Use the "Open a New Account" button and follow the required steps.

c699util Developer's Guide

If you have not already done so, please read the c699util User's Guide to familiarize yourself with c699util and its capabilities.

This article demonstrates how to add features to c699util and describes parts of the c699util build, test, and release process. I found it challenging to create c699util due to the Qt4, 699 C++ code, SWIG, and qmake learning curves, and so I hope to ease that learning process for others. I assume that readers are familiar with Qt4 and the 699 C++ code.

SWIG

SWIG (Simplified Wrapper and Interface Generator) is the program used to add a Python interface (i.e., c699util) to the 699 C++ code. As of this writing, c699util is created using SWIG 3.0.8.

Let's assume that we have code in files called example.cpp and example.hpp, and that we want to use SWIG to make this code available in Python. Our next step would be to create an interface file called example.i, and then put the declarations of the code that we want to expose to Python in that file; this is mostly just copying and pasting the declarations in example.hpp. We could then run SWIG as follows:

swig -Wall -c++ -python example.i

and SWIG would generate two files: example_wrap.cxx and example.py. example_wrap.cxx now needs to be compiled into a shared object library, and example.py will look for that shared object library when it is imported. This means that example.py is useless until example_wrap.cxx is built.

We would then build example_wrap.cxx as a dynamic library and name the resulting file _example.so (SWIG expects this naming convention -- an underscore followed by the module name. Also, there's nothing wrong with renaming a dynamic library file. These file types have no strictly enforced file extension.).

At this point, we could open up our Python interpreter and type "import example". example.py would be imported, and it would then load _example.so. example.*pp now has a Python interface.

A key takeaway from this description is that "running SWIG" successfully meant generating two files -- example.py and _example.so. Together, these two files are our new Python module.
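Because the module only works when both halves are present, it can help to check for them before running `import example`. A minimal sketch; `missing_swig_files` is a hypothetical helper. It accepts the compiled library under any suffix the running Python recognizes for extension modules (`importlib.machinery.EXTENSION_SUFFIXES`, which includes `.so` on macOS and Linux) and reports a missing one using the `_<module>.so` name from this article.

```python
import importlib.machinery
import os


def missing_swig_files(module, directory="."):
    """List the files a SWIG module named `module` still needs in `directory`.

    SWIG's generated <module>.py loads the compiled library _<module>
    (note the leading underscore), which Python accepts with any of its
    extension-module suffixes, such as ".so".
    """
    missing = []
    if not os.path.isfile(os.path.join(directory, module + ".py")):
        missing.append(module + ".py")
    suffixes = importlib.machinery.EXTENSION_SUFFIXES
    if not any(os.path.isfile(os.path.join(directory, "_" + module + s))
               for s in suffixes):
        missing.append("_" + module + ".so")
    return missing


print(missing_swig_files("example"))  # e.g. ['example.py', '_example.so']
```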

Of course, the devil is in the details, and it's easy to make mistakes in this process. So, developers who modify c699util (or create something similar to it) are encouraged to start by making sure that they can follow this tutorial (I had to get rid of the -arch i386 flag when I went through the article), which can be thought of as the "Hello, World!" of SWIG. As with any complicated software development, a smart approach is to start with something simple that works well (such as the example in this article) and then to make small changes/enhancements to it, ensuring that the small changes work as expected.

I found the SWIG documentation and mailing list to be very helpful, particularly:

While those last two links lead to very long articles, I found it worthwhile to grit my teeth and read them in their entireties. If you find yourself maintaining c699util, you should probably do the same. However, if you're just going to make a small change to c699util, you can probably get away with just "cookbooking" off of the simpler examples in the first two links as well as existing c699util code. (If you do so, be sure to update test_c699util.py! This will be described below.)

Additionally, my personal notes on SWIG and c699util have been shared with the MOMA software team. Contact a team member if you need access.

How c699util and the 699 C++ code work together

c699util is defined in three files: c699util.cpp, c699util.hpp, and c699util.i. c699util.cpp and c699util.hpp (occasionally referred to as c699util.*pp) can be thought of as the "bridge" between the 699 C++ code and the Python scripts that want to use the features defined in the 699 C++ code. As such, c699util.*pp are responsible for hiding 699 C++ implementation details from those Python scripts. c699util.i contains the routines from c699util.*pp that we want exposed to Python; again, this is mainly done by copying declarations from c699util.hpp into c699util.i.

The general approach taken by c699util.*pp is to define a class that Python scripts expect, such as TMFile, and within that class, use 699 C++ objects to implement useful methods. For example:

    class TMFile
    {
    public:
        // todo - I'm forgetting to use const in these methods
        TMFile(std::string filename);
        std::string filename();
        std::string absolute_directory();
        std::string directory_string();
        std::string get_mcal_filename();
        long long int file_size();
        int tid();
        int length();
        bool exists();
        TMPacket get_pkt(int index);
        double t0_in_unix_time() const;

    private:
        QString filename_;
        TmMeta tmMeta_;
        MomGse::MomTelemetryFilePtr momaTlmFilePtr_;
        MomGse::MarkerCache markerCache_;
        MomGse::MomaTimestampResolver& timestampResolver_;
    };

As you might expect, TMFile generates packet objects via momaTlmFilePtr_, and determines their marker and timestamp properties by using markerCache_ and timestampResolver_. TMPacket, ScienceDataCacheWrapper, and PythonStyleMomaScan follow the same design pattern.

How SWIG and qmake work together

I don't want to describe our entire build system, but there are a few c699util.pro details worth understanding:

    macx {
        INCLUDEPATH += /Library/Frameworks/Python.framework/Versions/3.4/include/python3.4m
        LIBS += /Library/Frameworks/Python.framework/Versions/3.4/lib/libpython3.4.dylib
        INCLUDEPATH += /Library/Frameworks/QtCore.framework/Versions/4/Headers
    }

    linux-g++ {
        # Wherever Python 3 is installed, it should be built with
        # "./configure --enable-shared". This is necessary so that the library files
        # generated contain position independent (-fPIC) code.
        INCLUDEPATH += /usr/local/include/python3.4m
        LIBS += /usr/local/lib/python3.4/config-3.4m/libpython3.4m.a
    }

This section is the most likely to cause issues. Python can be installed in several different ways, and with some of them these locations are not valid. However, if developers install Python 3.4.3 from the Python website, there shouldn't be any problems. Don't worry about the linux-g++ section for now; it is only for the CentOS virtual machine that is used to build c699util for mine699.
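Since these hard-coded paths depend on how Python was installed, it can help to ask the interpreter where its headers and library actually are before editing c699util.pro. A minimal sketch using the standard-library `sysconfig` module; run it with the same Python that will import c699util. It only reports values: you still choose the exact library file for LIBS (on a macOS framework build, `LDLIBRARY` may be a framework path rather than a libpython file name), and `enable_shared` is the value to check against the --enable-shared requirement in the linux-g++ comment.

```python
import sysconfig


def python_build_info():
    """Collect the interpreter details that c699util.pro hard-codes."""
    return {
        # Directory containing Python.h (the INCLUDEPATH entry).
        "include": sysconfig.get_paths()["include"],
        # Directory holding the Python library that LIBS should point into.
        "libdir": sysconfig.get_config_var("LIBDIR"),
        # Library file name (or framework path) Python was linked as.
        "ldlibrary": sysconfig.get_config_var("LDLIBRARY"),
        # 1 if Python was configured with --enable-shared, 0 if not
        # (None if the build does not record it).
        "enable_shared": sysconfig.get_config_var("Py_ENABLE_SHARED"),
    }


if __name__ == "__main__":
    for key, value in python_build_info().items():
        print("{}: {}".format(key, value))
```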

system(swig -Wall -c++ -python c699util.i)

Running qmake on the c699util.pro file invokes SWIG.

    macx {
        QMAKE_LFLAGS_PLUGIN -= -dynamiclib
        QMAKE_LFLAGS_PLUGIN += -bundle
    }

    linux-g++ {
        QMAKE_LFLAGS_PLUGIN += -fPIC
    }

When linking on OS X, replace the -dynamiclib flag with the -bundle flag, so that c699util is linked as a Mach-O bundle rather than as a dynamic library. I don't remember why this is necessary. When linking on the CentOS VM, produce position-independent code.

# Bundles have no strictly enforced file extension, but swig expects the

# libraries it uses to be of the form _<module name>.so, so rename the output

# file. (Don't forget the leading underscore. It's a common pitfall.)

macx {

QMAKE_POST_LINK = "mv libc699util.dylib _c699util.so"

}

linux-g++ {

QMAKE_POST_LINK = "mv libc699util.so _c699util.so"

}

That comment should sufficiently explain this part of c699util.pro.
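The rename matters because the generated c699util.py imports a compiled module named _c699util, and Python only finds it if the file name is that module name plus one of the extension suffixes the interpreter accepts. This sketch checks candidate file names against importlib.machinery.EXTENSION_SUFFIXES; the exact suffix list differs by platform and Python version, but plain .so is normally included on both macOS and Linux builds of CPython 3.

```python
import importlib.machinery


def importable_module_name(filename):
    """Return the module name Python would import `filename` as, or None."""
    # Check longer suffixes first so '.cpython-...so' wins over plain '.so'
    for suffix in sorted(importlib.machinery.EXTENSION_SUFFIXES, key=len, reverse=True):
        if filename.endswith(suffix):
            return filename[: -len(suffix)]
    return None


for name in ("_c699util.so", "libc699util.dylib", "libc699util.so"):
    print(name, "->", importable_module_name(name))
```

Without the post-link rename, the build output would either not be importable at all (libc699util.dylib) or would be importable only under the wrong name (libc699util).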

How to add a feature to c699util

Getting c699util Source Code

c699util is located in svn://repos699.gsfc.nasa.gov/cppcode/qt4/trunk/c699util.

Setting up test_c699util.py

c699util/test_c699util.py is a fully automated test of c699util. I've done my best to ensure that every c699util feature is thoroughly tested inside of test_c699util.py.

So, if you find yourself modifying c699util, ensure that test_c699util.py remains a thorough test of c699util by adding good tests to it! This test suite runs on both OSX and CentOS and has saved us a lot of trouble by finding subtle bugs before our users could be affected by them. It should be run after every new feature is added to ensure that nothing else has broken (it runs in less than 60 seconds). Now, to set up your environment to run test_c699util.py, do the following:

If you have not done so, follow the instructions here to get MOMA Data View and check out MOMA data.

Now, if you have not already done so, tunnel into MOMAIOC and then open up a new tab. In this new tab, type

cd ~

svn co svn://localhost:6994/momadata/test_data ~/momadata/test_data

Then, open up any TID inside of test_data using MOMA Data View. This should add a MOMA_TEST_DATA group to your ~/.699config.INI file. Once you've done this, close MOMA Data View and open up ~/.699config.INI in a text editor. Modify this line:

[MOMA_TEST_DATA]

<other lines>

tm_database=/Users/mpallone/momagse/TMDef/MOM_TM_Database.txt

by putting "Test_" in front of MOM_TM_Database.txt. It should now look like:

[MOMA_TEST_DATA]

<other lines>

tm_database=/Users/mpallone/momagse/TMDef/Test_MOM_TM_Database.txt

This file is a copy of MOM_TM_Database.txt which has been frozen just for the sake of test_c699util.py. This way, future conversion changes to the real database won't break test_c699util.py.
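If you need to make this change in several environments, it can be scripted. The sketch below assumes ~/.699config.INI parses as a standard INI file; the helper names are hypothetical. It only edits the configuration in memory: writing it back through configparser would drop any comments, so editing the file by hand as described above remains the safer route.

```python
import configparser
import posixpath


def frozen_db_path(path):
    """Prefix the database file name with 'Test_' (does nothing if already prefixed)."""
    directory, name = posixpath.split(path)
    if name.startswith("Test_"):
        return path
    return posixpath.join(directory, "Test_" + name)


def use_frozen_test_database(ini_text, section="MOMA_TEST_DATA"):
    """Return a ConfigParser whose test group points at the frozen database."""
    config = configparser.ConfigParser(interpolation=None)
    config.optionxform = str  # keep key case exactly as written
    config.read_string(ini_text)
    current = config[section]["tm_database"]
    config[section]["tm_database"] = frozen_db_path(current)
    return config


sample = ("[MOMA_TEST_DATA]\n"
          "tm_database=/Users/mpallone/momagse/TMDef/MOM_TM_Database.txt\n")
print(use_frozen_test_database(sample)["MOMA_TEST_DATA"]["tm_database"])
```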

Next, ensure that tmread is installed on your system.

At this point, you should have the necessary test_c699util.py dependencies. Building and running test_c699util.py is described in the next section.

Building c699util

Whenever I release a new version of c699util, I run the following commands:

make clean

qmake -spec macx-g++ c699util.pro # For CentOS, I can just run "qmake"

make release

cat top_of_c699util_dot_py.py c699util.py > temp_c699util.py && mv temp_c699util.py c699util.py

./test_c699util.py

./copy_into_699util.py # Only do this if test_c699util.py passes all tests!

(Actually, these commands are inside of the files osx_release and centos_release, and I just run one of those scripts depending on the OS I'm using.)

copy_into_699util.py is a special script which writes c699util metadata to a file called VersionInfo.txt, and then moves _c699util.so, c699util.py, and VersionInfo.txt into their "release homes" in the 699util repository. (How c699util fits into the 699util release scheme is described later in this article; do not concern yourself with such details at this moment.) VersionInfo.txt is important because it tells software engineers who are debugging c699util (a) what version of c699util is running on a user's computer, and (b) whether any uncommitted changes in the working copy made it into the user's version of c699util. Please only release c699util by using copy_into_699util.py.
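The exact contents of VersionInfo.txt aren't reproduced here. As a hedged illustration of the kind of metadata involved, this sketch parses the output of Subversion's svnversion command, which prints the working copy's revision (or a min:max range for a mixed-revision working copy) followed by flags such as M when there are local modifications. Both functions and the output layout are hypothetical; they are not what copy_into_699util.py actually writes.

```python
import re

_SVNVERSION = re.compile(r"^(?:(\d+):)?(\d+)([MSP]*)$")


def parse_svnversion(text):
    """Parse svnversion output such as '4168', '4123:4168M' or '4168MS'."""
    match = _SVNVERSION.match(text.strip())
    if match is None:
        raise ValueError("unrecognized svnversion output: %r" % text)
    low, high, flags = match.groups()
    return {
        "min_revision": int(low) if low else int(high),
        "max_revision": int(high),
        "modified": "M" in flags,  # uncommitted changes in the working copy
        "switched": "S" in flags,
        "partial": "P" in flags,
    }


def version_info_text(svnversion_output, diff_text=""):
    """Hypothetical VersionInfo.txt body: revision summary plus any local diff."""
    info = parse_svnversion(svnversion_output)
    lines = ["revision: %d" % info["max_revision"],
             "uncommitted changes: %s" % ("yes" if info["modified"] else "no")]
    if diff_text:
        lines += ["", diff_text.rstrip("\n")]
    return "\n".join(lines) + "\n"


print(version_info_text("4123:4168M\n"))
```

Recording the diff alongside the revision is what makes point (b) above answerable after the fact.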

Example Feature

Let's pretend that we want to add a description() method to c699util's TMPacket class. While we're at it, we'd like to make it so that calling str(pkt_object) in a Python script calls this description() method.

In c699util.hpp, we'd add the following:

/*****************************************************************************

* TMPacket definition

*****************************************************************************/

class TMPacket

{

public:

TMPacket(GseLib::pbytes packetBytesPtr, int pktIndex,

const MomGse::MarkerCache* markerCachePtr,

const MomGse::MomaTimestampResolver& timestampResolver);

std::string description(); // this method was just added

Next, in c699util.cpp, we would define the description() method:

/*****************************************************************************

* TMPacket Implementation

*****************************************************************************/

TMPacket::TMPacket(GseLib::pbytes packetBytesPtr, int pktIndex,

const MomGse::MarkerCache* markerCachePtr,

const MomGse::MomaTimestampResolver& timestampResolver)

: packetBytesPtr_(packetBytesPtr),

pktIndex_(pktIndex),

markerData_(markerCachePtr->getMarker(pktIndex, &hasMarkerData_)),

unixTimestamp_(timestampResolver.getPktUnixTime(pktIndex)),

relativeTimestamp_(timestampResolver.getPktRelativeTime(pktIndex))

{

// no further initialization needed

}

std::string TMPacket::description()

{

return std::string("this is a description of a packet");

}

Then, in c699util.i, we would copy this declaration:

/*****************************************************************************

* TMPacket definition

*****************************************************************************/

class TMPacket

{

public:

TMPacket(GseLib::pbytes packetBytesPtr, int pktIndex,

const MomGse::MarkerCache* markerCachePtr,

const MomGse::MomaTimestampResolver& timestampResolver);

std::string description(); // this method was just added

%pythoncode %{

def __str__(self):

    return self.description()

%}

Notice that the change we made to c699util.i is exactly the same as the change we just made to c699util.hpp, except for the %pythoncode stuff. (If we didn't want to add a __str__() method to TMPacket, then c699util.hpp and c699util.i would change in exactly the same way.)

%pythoncode is a SWIG feature that allows us to define Python code that appears in the Python file that the 'swig' command outputs (which, in our case, is c699util.py). The __str__() method defined above will now be a method of the TMPacket class, just as description() is a method of the TMPacket class. Once c699util is built, calling str(pkt_object) will invoke the __str__() method defined above, which will then call description(). %pythoncode is an extremely useful SWIG feature because it (a) allows us to use Python's special methods (such as __iter__() and __str__()) and generators, which are difficult or unrealistic to implement in C++, (b) allows us to use concise, powerful Python syntax when defining our new classes rather than writing needlessly verbose C++ code, and (c) allows us to import and use Python modules at runtime, even tmread modules!
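Conceptually, the generated c699util.py contains a TMPacket proxy class whose description() forwards into the compiled extension and whose __str__() is copied verbatim from the %pythoncode block. The pure-Python class below is a hypothetical stand-in that mimics only that shape, so the delegation can be run without building c699util; the real SWIG-generated proxy is more involved.

```python
class TMPacketProxySketch(object):
    """Hypothetical stand-in for the SWIG-generated TMPacket proxy class."""

    def description(self):
        # In c699util this call goes through to the C++ TMPacket::description()
        return "this is a description of a packet"

    # This is the part contributed by the %pythoncode block:
    def __str__(self):
        return self.description()


pkt_object = TMPacketProxySketch()
print(str(pkt_object))
```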

For example, consider the following uses of %pythoncode in the TMFile class:

class TMFile

<other TMFile methods go here>

%pythoncode %{

def test_name(self):

    return self.directory_string().split('-', maxsplit=5)[5]

%}

%pythoncode %{

def message_log(self):

    from tmread import MessageLog

    return MessageLog(self)

%}

%}

Defining test_name() using %pythoncode lets us take advantage of Python's convenient string manipulation features. Defining message_log() using %pythoncode makes it possible to use tmread's well-tested and powerful MessageLog class, because we can import and use it at runtime.

Release Process

This process should be followed every time c699util is released. It requires the previously mentioned CentOS virtual machine, which exists solely to build and test c699util for mine699. Passwords for this VM have been distributed to the MOMA team. Copies of the VM exist on Mark Pallone's external hard drive and on the MOMA Data iMac located (as of this writing) in GSFC Building 33 F109A, ECN #2377998, inside of /Users/lab/Documents/c699util-virtual-machines.

c699util Release Process

- Update test_c699util.py so that it thoroughly tests your new feature.

- Commit the code being released.

- On your Mac, run ./osx_release. This will:

  - do a clean build of c699util

  - run test_c699util.py

  - copy the relevant files (including working copy differences) into ~/labcode/699util/c699util, but only if the test succeeded.

- cd into ~/labcode/699util/c699util/osx, and 'svn commit' the newly built files.

- On the CentOS build VM, "svn up" the momagse and qt4 directories.

- Run ./centos_release. This will:

  - do a clean build of c699util

  - run test_c699util.py

  - copy the relevant files (including working copy differences) into ~/labcode/699util/c699util, but only if the test succeeded.

- cd into ~/labcode/699util/c699util/centos, and 'svn commit' the newly built files.

- Log into mine699. On your own account (e.g., 'mpallone' instead of the moma user):

  - svn update the Python tools

  - svn update ~/labcode/qt4

  - cd into ~/labcode/qt4/c699util, and run test_c699util.py

- If this works, switch to the 'moma' user and update the Python tools.

- Build a new Python tools package, being sure to test that the package works on our OSX VMs.

- Add a new entry to the release page.

- Commit the new DMG to momagse/Apps/py699.

- Document any new c699util features in the c699util user's guide.

- If necessary, update and release extract_hk_data_main on mine699 as well. (Strictly speaking this has nothing to do with c699util, but we shouldn't forget about this script when we release updates to c699util.)

It's annoying to run the test in three places, but sometimes bugs exist in one environment but not others.

c699util dependencies for both OS X and CentOS

It's useful to be aware of c699util dependencies when debugging. On OSX, _c699util.so contains the following dependencies:

(py699) gs699-mpallone:c699util mpallone$ otool -L _c699util.so

_c699util.so:

/Library/Frameworks/Python.framework/Versions/3.4/Python (compatibility version 3.4.0, current version 3.4.0)

QtGui.framework/Versions/4/QtGui (compatibility version 4.8.0, current version 4.8.4)

QtCore.framework/Versions/4/QtCore (compatibility version 4.8.0, current version 4.8.4)

QtNetwork.framework/Versions/4/QtNetwork (compatibility version 4.8.0, current version 4.8.4)

/usr/lib/libstdc++.6.dylib (compatibility version 7.0.0, current version 104.1.0)

/usr/lib/libSystem.B.dylib (compatibility version 1.0.0, current version 1226.10.1)

/usr/lib/libgcc_s.1.dylib (compatibility version 1.0.0, current version 915.0.0)

The expected location of these dependencies can be tweaked by using the install_name_tool program. Python 3.4 should be in the specified location if it is installed through the DMG file available on the Python website. The use of Homebrew in this particular case is discouraged. The Qt dependencies should be discoverable once Qt4 is installed. The remaining dependencies were already available on my Mac; I suspect that they're standard on all modern Macs.
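When debugging an import failure, it helps to pick out the install names that are not absolute paths; above, those are the Qt entries, which depend on where Qt4 is installed. This sketch parses otool -L output. On a Mac you could feed it subprocess.check_output(['otool', '-L', '_c699util.so'], universal_newlines=True). Treating @-prefixed names (@rpath, @loader_path) as already resolved is a simplifying assumption.

```python
import re

_DEP_LINE = re.compile(
    r"^\s*(?P<path>\S.*?)\s+\(compatibility version (?P<compat>[\d.]+), "
    r"current version (?P<current>[\d.]+)\)\s*$")


def parse_otool_l(output):
    """Turn `otool -L` output into (install_name, compat, current) tuples."""
    deps = []
    for line in output.splitlines()[1:]:  # the first line just names the file
        match = _DEP_LINE.match(line)
        if match:
            deps.append((match.group("path"), match.group("compat"), match.group("current")))
    return deps


def relative_install_names(deps):
    """Install names that are neither absolute nor @-prefixed (e.g. the Qt entries)."""
    return [path for path, _, _ in deps if not path.startswith(("/", "@"))]
```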

Unlike the OSX distribution of c699util, the CentOS distribution has the Python and Qt dependencies statically compiled into the _c699util.so file. The .so file's remaining dependencies are all standard (i.e., they're available by default on CentOS machines such as mine699.gsfc.nasa.gov).

c699util, the 699 developer setup, and the 699util package release scheme

Releasing c699util means committing new versions of

- c699util.py

- _c699util.so

- VersionInfo.txt

into the repos699 SVN server, in the locations labcode/699util/c699util/osx and labcode/699util/c699util/centos.

The rest of this section describes how c699util fits into the 699util developer's setup and the 699util package release system.

Review of the 699util Environment

Even for those who are familiar with the 699util installation article, how all the different parts of 699util fit together can still be hard to understand. So, reviewing this should make the rest of this section much easier to understand.

The 699util environment has four major parts:

- 699 Data

- 699 tool configuration/settings

- 699 Code

- Local environment configuration

1. 699 Data. Setting up 699 data simply means checking out folders such as momadata/etu, momadata/fm, etc. from momaioc.gsfc.nasa.gov's SVN repository. Typically, momadata is checked out into the home directory, but this is not required.

2. 699 tool configuration/settings. Items in this category mainly tell 699 software where data is, and how to interpret that data. This category includes:

- checking out momagse

- using MOMA Data View to initialize the ~/.699config.INI file. Simply open up a TID for each model that you have checked out in your momadata working copy.

- (If you're doing a developer install, you will also have to check out config699.)

3. 699 code. Developers can check out 699util from SVN. Users can find MOMA script DMGs here.

4. Local environment configuration. This tends to be the most opaque. It consists of the following:

- Installing Python 3.4.3 from the Python website, and updating your .bashrc, .bash_profile, or .profile file so that the newly installed version of Python can be found via the PATH environment variable.

- Setting up a virtual environment using the program virtualenv. A virtual environment can be thought of as a folder containing libraries and executable programs, as well as the environment variables that reference those libraries and executables. In the 699util world, virtualenv is used to create either ~/py699 or ~/py699-moma. Python 3.4.3 is copied into this directory and, in the case of the package install, 699util scripts are also copied. Typically, the last line of a user's ~/.bashrc file "activates" the virtual environment, which essentially just puts the virtual environment's binary folder (e.g., ~/py699/bin) at the front of the PATH variable. Then, when a script starts with #!/usr/bin/env python3, the first python3 executable found by the /usr/bin/env program will be the one inside of the ~/py699 directory.

- The location of the 699util scripts, such as tmplot.py. In the package install meant for users (i.e., non-developers), these scripts are simply copied into the virtual environment folder ~/py699/bin. For developers who already have these scripts checked out into a working copy, the location of those scripts is added to the PATH variable as follows:

  - the developer opens up a terminal

  - the terminal sources the developer's ~/.bashrc file

  - the ~/.bashrc file sources the ~/config699/bash_config.sh file, which adds the script directories to the PATH variable.

- The location of 699util libraries. This is very similar to how scripts are handled. For non-developers, the 699util Python library is in the virtual environment's library folder. For developers, the ~/config699/bash_config.sh script adds the libraries to the PYTHONPATH environment variable, which Python uses when looking for modules that need to be imported.
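The PATH mechanics above are what make #!/usr/bin/env python3 pick the virtual environment's interpreter. This runnable sketch (POSIX only) reproduces them with two throwaway directories standing in for the system Python's bin folder and ~/py699/bin. shutil.which searches a PATH-style string in order and returns the first executable match, which is the same first-match-wins rule /usr/bin/env relies on.

```python
import os
import shutil
import stat
import tempfile


def make_fake_python(directory):
    """Create an executable file named python3 in `directory`."""
    path = os.path.join(directory, "python3")
    with open(path, "w") as handle:
        handle.write("#!/bin/sh\necho fake\n")
    os.chmod(path, os.stat(path).st_mode | stat.S_IXUSR)
    return path


with tempfile.TemporaryDirectory() as system_bin, tempfile.TemporaryDirectory() as venv_bin:
    make_fake_python(system_bin)
    venv_python = make_fake_python(venv_bin)
    # "Activating" a virtual environment essentially prepends its bin folder to PATH
    path_value = os.pathsep.join([venv_bin, system_bin])
    found = shutil.which("python3", path=path_value)
    print(found == venv_python)  # the first match on PATH wins
```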

Developer Setup

As mentioned above, the developer install sources ~/config699/bash_config.sh, which sets the PATH and PYTHONPATH environment variables. bash_config.sh determines the version of c699util that's correct for the user's operating system, and adds that directory to the PYTHONPATH variable. More explicitly, if c699util exists in this location:

~/labcode/699util/c699util/osx/c699util.py

~/labcode/699util/c699util/osx/_c699util.so

then the directory ${HOME}/labcode/699util/c699util/osx will be added to the user's PYTHONPATH, so that the import c699util statement inside of a Python script knows to look in this directory.
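bash_config.sh itself is a bash script, and its exact logic isn't reproduced in this article. The following is a hypothetical Python re-expression of the behavior just described: pick the osx or centos directory for the current platform and put it at the front of PYTHONPATH without duplicating it.

```python
import os
import sys


def c699util_directory(home, platform=sys.platform):
    """Hypothetical rendering of bash_config.sh's per-OS directory choice."""
    if platform == "darwin":
        subdir = "osx"
    elif platform.startswith("linux"):
        subdir = "centos"
    else:
        raise RuntimeError("c699util is only built for OS X and CentOS")
    return os.path.join(home, "labcode", "699util", "c699util", subdir)


def prepend_to_pythonpath(directory, current=""):
    """Return a PYTHONPATH value with `directory` first, without duplicating it."""
    parts = [p for p in current.split(os.pathsep) if p and p != directory]
    return os.pathsep.join([directory] + parts)


print(prepend_to_pythonpath(c699util_directory(os.path.expanduser("~")),
                            os.environ.get("PYTHONPATH", "")))
```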

As mentioned in a previous section, _c699util.so has some Qt4 dependencies. When `import c699util` is executed, some code at the top of c699util.py will determine if _c699util.so's dependencies are unresolved, and if so, change the dependencies to point to the correct location on the user's computer.
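The actual code at the top of c699util.py isn't reproduced here. As a hypothetical sketch of one way to do the rewrite, the function below builds install_name_tool -change old new file commands for relative Qt framework install names, leaving absolute and @-prefixed names alone. The Qt location /Library/Frameworks used in the example is an assumption; the commands are only built, not run.

```python
import os


def install_name_changes(install_names, qt_frameworks_dir, library="_c699util.so"):
    """Build `install_name_tool -change old new library` commands for Qt entries."""
    commands = []
    for old in install_names:
        # Skip absolute paths, @rpath-style names, and anything that isn't a framework
        if old.startswith(("/", "@")) or ".framework/" not in old:
            continue
        new = os.path.join(qt_frameworks_dir, old)
        commands.append(["install_name_tool", "-change", old, new, library])
    return commands


for cmd in install_name_changes(
        ["/usr/lib/libSystem.B.dylib", "QtCore.framework/Versions/4/QtCore"],
        "/Library/Frameworks"):
    print(" ".join(cmd))  # on a Mac: subprocess.check_call(cmd)
```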

699util Package Release

Non-developers (i.e., users) install 699util by mounting a 699util .dmg file and running the install699util.sh script. The package release scheme does not use the aforementioned ~/config699/bash_config.sh script. The .dmg file contains the following in its root directory:

- _c699util.so

- c699util.py

- Qt4 dependencies

install699util.sh simply copies these files into the virtual environment folder ~/py699/lib, and then tweaks the user's .bashrc file so that ~/py699/lib is always in the user's PYTHONPATH whenever a new terminal is opened.
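The exact line install699util.sh adds to .bashrc isn't shown here. This hypothetical sketch illustrates the one property such a tweak needs: it must be idempotent, so re-running the installer doesn't pile up duplicate lines. The export line format is an assumption; ${PYTHONPATH:+:$PYTHONPATH} is standard bash expansion that appends the old value only when it is non-empty.

```python
def ensure_pythonpath_export(bashrc_text, lib_dir="$HOME/py699/lib"):
    """Append a PYTHONPATH export for `lib_dir` unless an identical line exists."""
    line = 'export PYTHONPATH="%s${PYTHONPATH:+:$PYTHONPATH}"' % lib_dir
    if line in bashrc_text.splitlines():
        return bashrc_text
    if bashrc_text and not bashrc_text.endswith("\n"):
        bashrc_text += "\n"
    return bashrc_text + line + "\n"


once = ensure_pythonpath_export("alias ll='ls -l'")
twice = ensure_pythonpath_export(once)
print(once == twice)
```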

c699util User's Guide

Similar to Introduction to the 699 Python Library, this article is a high-level description of c699util, intended for those who are only using it to write Python scripts. I do not describe low level c699util details here. For that, see the c699util developer's guide.

c699util is a Python module which provides housekeeping and science data extraction routines for MOMA telemetry files. Its interface is very similar to 699util's tmread module:

#!/usr/bin/env python3

#==============================================================================

# Packet data extraction through 699util's tmread module:

#==============================================================================

import tmread

tmfile = tmread.get_tmfile('/Users/mpallone/momadata/fm/2016-01-15-14.06.47-30410-MSFUNC/tm.mom.m3.s3')

wrp_current_datapoints = []

wrp_current_hkid = 800

pkt_17_count = 0

for pkt in tmfile(17):

    pkt_17_count += 1

    wrp_current_datapoints.extend(pkt[wrp_current_hkid])

# Print out the data:

print("pkt_17_count:", pkt_17_count)

print()

print("WRP current sample timestamp, WRP current sample science value:")

for timestamp, value in wrp_current_datapoints:

    print(timestamp, '\t', value)

#==============================================================================

# Packet data extraction through c699util:

#==============================================================================

import c699util

c_tmfile = c699util.construct_tmfile('/Users/mpallone/momadata/fm/2016-01-15-14.06.47-30410-MSFUNC/tm.mom.m3.s3')

wrp_current_datapoints = []

wrp_current_hkid = 800

pkt_17_count = 0

for pkt in c_tmfile(17):

    pkt_17_count += 1

    wrp_current_datapoints.extend(pkt[wrp_current_hkid])

# Print out the data:

print("pkt_17_count:", pkt_17_count)

print()

print("WRP current sample timestamp, WRP current sample science value:")

#for timestamp, value in wrp_current_datapoints:

#    print(timestamp, '\t', value)

# Note the change here!

for datapoint in wrp_current_datapoints:

    print(datapoint.relative_timestamp, '\t', datapoint.value)

As this example illustrates, some minor interface differences exist between c699util and tmread, which are documented at the end of this article.
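The datapoint difference is the change you'll hit most often when porting a script. As a hypothetical porting helper (not part of c699util or tmread), the function below accepts either kind of datapoint. It assumes tmread datapoints unpack into (timestamp, value) as in the example above, and that the two libraries' timestamps are comparable; check the latter before mixing them in one analysis.

```python
def timestamp_and_value(datapoint):
    """Return (timestamp, value) for either kind of datapoint.

    c699util.TMDatapoint objects expose .relative_timestamp and .value;
    tmread datapoints unpack as (timestamp, value) pairs.
    """
    if hasattr(datapoint, "relative_timestamp"):
        return datapoint.relative_timestamp, datapoint.value
    timestamp, value = datapoint
    return timestamp, value


# Usage: for timestamp, value in map(timestamp_and_value, wrp_current_datapoints): ...
```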

You are highly encouraged to follow this convention: if you use c699util in a Python script, prefix the script's name with "c_". For example, when I ported ioncount.py over to c699util, I made a copy of ioncount.py, called it c_ioncount.py, and then replaced tmread code with similar c699util code. This way, both scripts are available to run (a nice option to have if we encounter bugs), and we always know which underlying library we're using.

If we already have tmread, why bother with c699util?

Well, for one, c699util is about an order of magnitude faster than tmread (and sometimes more -- c_ioncount.py was 39x faster than ioncount.py when extracting selected ion counts).

More importantly, much of tmread is deprecated for MOMA. Any feature that tmread shares with c699util is no longer maintained. Instead of maintaining telemetry extraction libraries in C++, and then duplicating the exact same functionality in Python, we've decided to simply add a Python interface to the C++ code. This dramatically simplifies feature implementation, eliminates consistency issues between various tools, and speeds up software development and program run time, but it also means that tmread won't output data as accurately as c699util, and also won't have as many features.

c699util Flaw -- only one TMFile at a time

Because the C++ code around which c699util wraps frequently uses singletons, c699util can only operate on one TMFile at a time. Calling c699util.construct_tmfile() will invalidate any previously existing c699util.TMFile objects.

We rarely ever (if at all) need this capability, but when the C++ and c699util code is ported over to future missions, this design should be reconsidered.
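Until then, a safe pattern for multi-file scripts is to finish with each TMFile before constructing the next, copying out plain Python values (numbers, strings) rather than keeping TMFile or packet objects around. The construct_tmfile parameter below is a hypothetical hook that exists only so the sketch can run without c699util installed.

```python
def summarize_tmfiles(paths, construct_tmfile=None):
    """Collect plain Python values from several telemetry files, one at a time.

    Constructing a new c699util.TMFile invalidates earlier ones, so copy
    everything you need out of each file before moving on to the next.
    """
    if construct_tmfile is None:
        import c699util  # only needed when no factory is injected
        construct_tmfile = c699util.construct_tmfile

    summaries = {}
    for path in paths:
        c_tmfile = construct_tmfile(path)
        # Store ints/floats/strings, never the TMFile or its packets
        summaries[path] = {"packets": len(c_tmfile)}
        del c_tmfile
    return summaries
```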

Obtaining c699util

If you do not have the 699 Python tools installed on your computer, then the developer instructions in Python_Tool_Installation should work, although it's probably best to just ask a MOMA software developer to do it for you. Be sure to install Python 3.4.3 from the Python website.

If you already have 699 Python tools installed, you will probably also have to ask a MOMA software developer to set up c699util. Most likely, Python 3.4.3 will need to be installed directly from the website (sorry, Homebrew aficionados), and your virtual environment will need to be rebuilt so that it uses Python 3.4.3. However, if you happen to already be using Python 3.4.3, then SSH tunneling into repos699 and then updating ~/config699 and ~/labcode/699util should be all that you need to do to start using c699util.

TMFile

c699util provides a TMFile class which is very similar to tmread's TMFile class. c699util.TMFile objects can be obtained as follows:

import c699util

c_tmfile = c699util.get_tmfile('/Users/mpallone/momadata/fm/2016-01-15-14.06.47-30410-MSFUNC/tm.mom.m3.s3')

c699util.get_tmfile() is a thin wrapper around tmread.get_tmfile(), so it does all of the telemetry-file-finding-magic that tmread.get_tmfile() does:

import c699util

c_tmfile = c699util.get_tmfile() # grab the tm.mom file in the cwd

c_tmfile = c699util.get_tmfile(12345) # Grab TID 12345

c_tmfile = c699util.get_tmfile("/Users/mpallone/momadata/fm/2016-01-15-14.06.47-30410-MSFUNC") # Grab the tm.mom file in the specified directory

It would be preferable to not use the deprecated tmread module, but it seems unlikely to me that I'll ever get around to implementing get_tmfile()'s magic inside of c699util, at least on MOMA. Also, this tactic allows us to keep using the extremely useful TMArgumentParser class.

If tmread is not available, c699util.construct_tmfile() can be used:

import c699util

c_tmfile = c699util.construct_tmfile('/Users/mpallone/momadata/fm/2016-01-15-14.06.47-30410-MSFUNC/tm.mom.m3.s3')

Also, note the convention of using c_tmfile. I prefer to use 'tmfile' for tmread.TMFile objects, and 'c_tmfile' for c699util.TMFile objects, so that I can tell at a glance what kind of object I'm using. I strongly recommend that other script writers do the same.

Other useful methods:

#!/usr/bin/env python3

import tmread, c699util

tmfile = tmread.get_tmfile(30410) # Give it a TID and let it find the file for us

c_tmfile = c699util.construct_tmfile(tmfile.filename)

# (alternatively, we could just do c_tmfile = c699util.get_tmfile(30410))

print("Absolute filename:", c_tmfile.filename())

print("Absolute filename:", str(c_tmfile))

# Absolute filename: /Users/mpallone/momadata/fm/2016-01-15-14.06.47-30410-MSFUNC/tm.mom.m3.s3

print("Absolute directory:", c_tmfile.absolute_directory())

# Absolute directory: /Users/mpallone/momadata/fm/2016-01-15-14.06.47-30410-MSFUNC

# Parsed from the first GSE packet in the tm.mom file:

print("TID Directory name:", c_tmfile.directory_string())

# TID Directory name: 2016-01-15-14.06.47-30410-MSFUNC

print("Mass calibration file used:", c_tmfile.get_mcal_filename())

# Mass calibration file used: 2016-01-17-23.48.39-30410.mcal

print("File size in bytes:", c_tmfile.file_size())

# File size in bytes: 48384623

print("TID:", c_tmfile.tid())

# TID: 30410

print("t0 in Unix time:", c_tmfile.t0_in_unix_time())

# t0 in Unix time: 1452884878.3959727

print("Number of packets:", c_tmfile.length())

print("Number of packets:", len(c_tmfile))

# Number of packets: 70232

print("TMFile actually exists on the filesystem:", c_tmfile.exists())

print("TMFile actually exists on the filesystem:", bool(c_tmfile))

# TMFile actually exists on the filesystem: True

pkt_at_index_10 = c_tmfile.get_pkt(10)

pkt_at_index_10 = c_tmfile[10]

# We can iterate through c_tmfile and get packet objects:

pkt_count = 0

for pkt in c_tmfile:

    pkt_count += 1

# The old tm_filter routines also work for c699util:

pkt_17_count = 0

for pkt in c_tmfile(17):

    pkt_17_count += 1

pkt_count = 0

for pkt in c_tmfile(type_filter=17, # Only yield packets of type 17

                    marker_filter=2500, # Only yield packets at marker 2500

                    start_time=1000, # Relative time = 1000 seconds

                    stop_time=2000): # Relative time = 2000 seconds

    pkt_count += 1

# If the directory name is

# '2016-01-15-14.06.47-30410-MSFUNC'

# then the test name is

# 'MSFUNC'

print("Test name:", c_tmfile.test_name())

# tmread.MessageLog is not deprecated, and is fully compatible with c699util:

msg_log = c_tmfile.message_log()

meta_markers = c_tmfile.get_meta_markers()

for meta_marker in meta_markers:

    print(meta_marker.startRelativeTimeInSecs)

    print(meta_marker.endRelativeTimeInSecs)

    print(meta_marker.metaMarkerId)

    print(meta_marker.index)

    print(meta_marker.msg)

print("model:", c_tmfile.model())

# model: fm

print("FSW timestamp of t0 packet:", c_tmfile.t0_in_sclk())

# FSW timestamp of t0 packet: 19351744

tmread.extract_tmfields() is compatible with c699util.TMFile objects:

#!/usr/bin/env python3

import tmread, c699util

tmfile = tmread.get_tmfile(30410) # Give it a TID and let it find the file for us

c_tmfile = c699util.construct_tmfile(tmfile.filename)

AUX_AMP_DAC_SET_HKID = 61

RF_AMP_MON_HKID = 62

fields = tmread.extract_tmfields(c_tmfile, [AUX_AMP_DAC_SET_HKID, RF_AMP_MON_HKID])

# Note that we still get c699util.TMDatapoint objects here, which are different

# from 699util's tmread datapoints!

sum_ = 0

count = 0

for datapoint in fields[RF_AMP_MON_HKID]:

    relative_timestamp = datapoint.relative_timestamp

    unix_timestamp = datapoint.unix_timestamp

    raw_timestamp = datapoint.fsw_timestamp

    sum_ += datapoint.value

    count += 1

if count != 0:

    print("RF Amp mon avg:", sum_ / count)

TMPacket

Just like TMFile, c699util provides TMPacket objects which are very similar to tmread.TMPacket objects:

#!/usr/bin/env python3

import tmread, c699util

tmfile = tmread.get_tmfile(30410) # Give it a TID and let it find the file for us

c_tmfile = c699util.construct_tmfile(tmfile.filename)

gse_pkt = c_tmfile[0] # Pkt type 8, GSE generated

pkt_22 = c_tmfile[65] # Pkt type 22

msg_log_pkt = c_tmfile[85] # Pkt type 8

print("Unmasked type of gse_pkt:", gse_pkt.full_type())

# Unmasked type of gse_pkt: 136

print("Unmasked type of pkt_22:", pkt_22.full_type())

# Unmasked type of pkt_22: 22

# This is analogous to tmread.TMPacket.type

print("gse_pkt with gse-generated bit masked out:", gse_pkt.get_type())

# gse_pkt with gse-generated bit masked out: 8

print("pkt_22 length, including header and checksum:", pkt_22.total_length())

# pkt_22 length, including header and checksum: 224

print("pkt_22 length, just the data:", pkt_22.length())

# pkt_22 length, just the data: 212

print("pkt_22 checksum:", pkt_22.checksum())

print("pkt_22 checksum:", hash(pkt_22))

# pkt_22 checksum: 1379474455

print("Is pkt_22 gse created?", pkt_22.gse_created())

# Is pkt_22 gse created? False

print("Is gse_pkt gse created?", gse_pkt.gse_created())

# Is gse_pkt gse created? True

print("pkt_22 sequence number:", pkt_22.sequence())

# pkt_22 sequence number: 146

# Yes, I'm aware that 'sclk' is a misnomer

print("pkt_22 FSW timestamp:", pkt_22.sclk())

# pkt_22 FSW timestamp: 19540225

print("pkt_22 FSW timestamp, in seconds:", pkt_22.sclk_in_seconds())

# pkt_22 FSW timestamp, in seconds: 1954.0225

print("pkt_22 index:", pkt_22.index())

# pkt_22 index: 65

print("pkt_22 marker ID:", pkt_22.marker_id())

# pkt_22 marker ID: 0

# Packets before the first marker packet have a marker index of 0

print("pkt_22 marker index:", pkt_22.xina_marker_index())

# pkt_22 marker index: 0

print("pkt_22 unix timestamp, in seconds:", pkt_22.unix_timestamp())

# pkt_22 unix timestamp, in seconds: 1452884897.2440727

print("pkt_22 relative timestamp, in seconds:", pkt_22.relative_timestamp())

# pkt_22 relative timestamp, in seconds: 18.848099946975708

print("pkt_22 marker text:", pkt_22.marker_text())

# pkt_22 marker text: <no marker text, but you get the idea>

###########################################################

#

# (this doesn't actually work for TID 30410, but I think you get the picture)

#

# HKID 100 = phase cycle readback HKID

phase_cycle_readback_raw_data = pkt_22.all_raw_data(100)

phase_cycle_readback_eng_data = pkt_22.all_eng_data(100)

phase_cycle_readback_sci_data = pkt_22.all_data(100)

phase_cycle_readback_sci_data = pkt_22[100]

datapoint = phase_cycle_readback_sci_data[0]

print("Datapoint unix timestamp, in seconds:", datapoint.unix_timestamp)

print("Datapoint relative timestamp, in seconds:", datapoint.relative_timestamp)

print("Datapoint value:", datapoint.value)

first_phase_cycle_readback_science_sample = pkt_22.get_first_value(100)

#

#

###########################################################

print("Does gse_pkt have an earlier timestamp than pkt_22?", gse_pkt < pkt_22)

# Does gse_pkt have an earlier timestamp than pkt_22? True

print("Raw message log:", msg_log_pkt.raw_message())

print("Sanitized message log:", msg_log_pkt.message())

# (output omitted for brevity)

print("raw bytes:", pkt_22.raw_packet())

# b'\x16\x92\x00\xd4\x01*)\x01\xfa\xf3 \x02\x00...(etc).

meta_markers = pkt_22.get_meta_markers()

for meta_marker in meta_markers:

    print(meta_marker.startRelativeTimeInSecs)

    print(meta_marker.endRelativeTimeInSecs)

    print(meta_marker.metaMarkerId)

    print(meta_marker.index)

    print(meta_marker.msg)
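The values printed above fit together in a way that helps when reading raw packets. 136 (0x88) masks down to 8, which suggests the GSE-generated flag is bit 0x80. pkt_22's FSW timestamp of 19540225 corresponds to 1954.0225 seconds, i.e. 10000 ticks per second. Subtracting t0_in_sclk() (19351744) gives the printed relative timestamp, and adding that to t0_in_unix_time() gives the printed Unix timestamp, to within float32-level rounding. The first raw bytes (0x16, 0x92, 0x0000d4) also match the type (22), sequence number (146), and data length (212). All of these constants and the header layout are inferred from this one example, not taken from the MOMA telemetry definition. Verify them against the TM database before relying on them. The real timestamp resolver may also handle cases (such as clock rollover) that this sketch ignores.

```python
GSE_GENERATED_BIT = 0x80       # inferred: 136 (0x88) with this bit cleared is 8
SCLK_TICKS_PER_SECOND = 10000  # inferred: 19540225 ticks -> 1954.0225 s


def split_full_type(full_type):
    """Return (masked type, gse_created) for an unmasked packet type."""
    return full_type & ~GSE_GENERATED_BIT, bool(full_type & GSE_GENERATED_BIT)


def timestamps_from_sclk(sclk, t0_sclk, t0_unix):
    """Relative and Unix timestamps, assuming a simple offset from the t0 packet."""
    relative = (sclk - t0_sclk) / SCLK_TICKS_PER_SECOND
    return relative, t0_unix + relative


def header_fields(raw):
    """Inferred layout: type byte, sequence byte, big-endian 16-bit data length."""
    return raw[0], raw[1], int.from_bytes(raw[2:4], "big")


print(split_full_type(136))  # gse_pkt
print(split_full_type(22))   # pkt_22
print(timestamps_from_sclk(19540225, 19351744, 1452884878.3959727))
print(header_fields(b'\x16\x92\x00\xd4\x01*)\x01'))
```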

Extracting Science Data

c699util has a get_scans() function, just like tmread.momascience:

#!/usr/bin/env python3

import c699util

c_tmfile = c699util.get_tmfile(30410)

scan_count = 0

for scan in c699util.get_scans(c_tmfile):

    scan_count += 1

print("Number of scans:", scan_count)

The usual filtering options (packet type, marker, start time, etc.) are available.

Unlike tmread, c699util provides a wrapper around MOMA Data View's ScienceDataCache class:

#!/usr/bin/env python3

import c699util

# Not needed

# c_tmfile = c699util.construct_tmfile(tmfile.filename)

# Notice that this factory function takes the absolute filename, just like

# construct_tmfile

# science_cache = c699util.construct_science_data_cache('/Users/mpallone/momadata/fm/2016-01-15-14.06.47-30410-MSFUNC/tm.mom.m3.s3')

science_cache = c699util.get_science_data_cache(30410)

c699util.get_science_data_cache() is analogous to c699util.get_tmfile(), so it can be passed no arguments if the programmer wants to search the cwd, or just the TID to search for, or the absolute path of the tm.mom file, etc.

The science cache can be iterated through to get scan objects (just like c699util.get_scans()), or it can be indexed, as demonstrated below.

#!/usr/bin/env python3

import c699util

science_cache = c699util.get_science_data_cache(30410)

last_scan = science_cache[-1]

print("Last scan data:")

print("Unix timestamp, in seconds:", last_scan.unix_timestamp())

# Unix timestamp, in seconds: 1452890221.7956727

print("Relative timestamp, in seconds:", last_scan.relative_timestamp())

# Relative timestamp, in seconds: 5343.399699926376

print("marker ID:", last_scan.marker_id())

# marker ID: 2050

print("marker Index:", last_scan.xina_marker_index())

# marker Index: 136

print("marker text:", last_scan.marker_text())

# marker text: SCN 602 START Emission Current Source B

list_of_ion_counts = last_scan.counts()

print("total ion count:", sum(list_of_ion_counts))

print("total ion count:", last_scan.total_ion_count())

# total ion count: 40463

print("Highest count in the last_scan:", last_scan.highest_count())

# Highest count in the last_scan: 708

print("Bin number containing the highest count:", last_scan.highest_count_bin_num())

# Bin number containing the highest count: 1710

print("Lowest count:", last_scan.lowest_count())

# Lowest count: 0

# There should be one entry for every 100 bins

rf_status_entry_list = last_scan.rf_status_entries()

rf_entry = rf_status_entry_list[0]

print("First RF Entry start bin:", rf_entry.start_bin)

# First RF Entry start bin: 0

print("First RF Entry RF Amp V:", rf_entry.rf_amp_v)

# First RF Entry RF Amp V: 66.67613871395588

print("First RF Entry RF Amp DAC V:", rf_entry.rf_amp_dac_v)

# First RF Entry RF Amp DAC V: 70.00512770935893

print("First RF Entry Aux Amp DAC V:", rf_entry.aux_amp_dac_v)

# First RF Entry Aux Amp DAC V: 1.0998702980577946

print("RF Amp Start:", last_scan.rf_amp_start())

# RF Amp Start: 66.67613871395588

print("RF Amp End:", last_scan.rf_amp_end())

# RF Amp End: 993.6579284607433

print("RF Amp DAC Start:", last_scan.rf_amp_dac_start())

# RF Amp DAC Start: 70.00512770935893

print("RF Amp DAC End:", last_scan.rf_amp_dac_end())

# RF Amp DAC End: 996.3826513544906

print("Aux Amp DAC Start:", last_scan.aux_amp_dac_start())

# Aux Amp DAC Start: 1.0998702980577946

print("Total scan time, in seconds:", last_scan.total_scan_time())

# Total scan time, in seconds: 0.05000000074505805

print("Peak counts per second:", last_scan.peak_counts_per_sec())

# Peak counts per second: 70799998.9449978

print("Pkt source type (26 or 27):", last_scan.src_pkt_type())

# Pkt source type (26 or 27): 27

print("Number of scans that went into this scan (typically 10 for sum packets):")

print(last_scan.num_scans())

# Number of scans that went into this scan (typically 10 for sum packets):

# 10

print("Does this scan have a scan status packet?", last_scan.has_scan_status())

# Does this scan have a scan status packet? True

print("Number of scan status packets:", last_scan.num_scan_status_pkts())

# Number of scan status packets: 10

print("Does this scan have a preceding SEB HK packet?", last_scan.has_seb_hk_pkt())

# Does this scan have a preceding SEB HK packet? True

print("Is this scan a dark count scan?", last_scan.is_dark_count_scan())

# Is this scan a dark count scan? False

print("Sum of the ion counts for bins 500-600:")

print(last_scan.selected_ion_count_sum_by_bin(500,600))

# Sum of the ion counts for bins 500-600:

# 3981

print("Sum of the ion counts between masses 138-140:")

print(last_scan.selected_ion_count_sum_by_mass(138,140))

# Sum of the ion counts between masses 138-140:

# 78

print("Maximum ion count between bins 500-600:")

print(last_scan.selected_ion_count_max_by_bin(500,600))

# Maximum ion count between bins 500-600:

# 537

print("Maximum ion count between masses 138-140:")

print(last_scan.selected_ion_count_max_by_mass(138,140))

# Maximum ion count between masses 138-140:

# 21

print("seb_bin_time_readback:", last_scan.seb_bin_time_readback())

# seb_bin_time_readback: 10.000000149011612

print("seb_bin_time_readback_in_seconds:", last_scan.seb_bin_time_readback_in_seconds())

# seb_bin_time_readback_in_seconds: 1.0000000149011612e-05

print("seb_special_scan_id:", last_scan.seb_special_scan_id())

# seb_special_scan_id: 602

print("seb_raw_scan_value:", last_scan.seb_raw_scan_value())

# seb_raw_scan_value: 127

print("seb_scan_value:", last_scan.seb_scan_value())

# seb_scan_value: 49.80392238497734

print("seb_rf_freq_hz:", last_scan.seb_rf_freq_hz())

# seb_rf_freq_hz: 988400.0

print("seb_last_rf_amp_mon:", last_scan.seb_last_rf_amp_mon())

# seb_last_rf_amp_mon: 993.6579284607433

print("seb_rf_amp_dac_set:", last_scan.seb_rf_amp_dac_set())

# seb_rf_amp_dac_set: 996.3826513544906

print("seb_aux_amp_dac_set:", last_scan.seb_aux_amp_dac_set())

# seb_aux_amp_dac_set: 7.297474631876097

print("seb_counter_sel:", last_scan.seb_counter_sel())

# seb_counter_sel: 1

print("seb_bin_count_set:", last_scan.seb_bin_count_set())

# seb_bin_count_set: 5000

print("seb_bin_count_reg:", last_scan.seb_bin_count_reg())

# seb_bin_count_reg: 20000

print("seb_phase_cycle_rdbk:", last_scan.seb_phase_cycle_rdbk())

# seb_phase_cycle_rdbk: 3

print("seb_rf_step_set:", last_scan.seb_rf_step_set())

# seb_rf_step_set: 0.1870379802200142

print("seb_rf_step_reg:", last_scan.seb_rf_step_reg())

# seb_rf_step_reg: -0.038366765173336245

print("seb_aux_step_set:", last_scan.seb_aux_step_set())

# seb_aux_step_set: 0.0012397955291065842

print("seb_aux_step_reg:", last_scan.seb_aux_step_reg())

# seb_aux_step_reg: 0.0006103608758678569

print("seb_ionization_time_in_milliseconds:", last_scan.seb_ionization_time_in_milliseconds())

# seb_ionization_time_in_milliseconds: 4.999999888241291

print("seb_scan_em1:", last_scan.seb_scan_em1())

# seb_scan_em1: -2069.6365661583154

print("seb_scan_em1_raw:", last_scan.seb_scan_em1_raw())

# seb_scan_em1_raw: 197

print("seb_scan_em2:", last_scan.seb_scan_em2())

# seb_scan_em2: 994.4167251074475

print("seb_scan_em2_raw:", last_scan.seb_scan_em2_raw())

# seb_scan_em2_raw: 0

print("seb_src_a_foc_a_dac_set:", last_scan.seb_src_a_foc_a_dac_set())

# seb_src_a_foc_a_dac_set: -39.6078431372549

print("seb_src_b_foc_a_dac_set:", last_scan.seb_src_b_foc_a_dac_set())

# seb_src_b_foc_a_dac_set: -39.6078431372549

print("seb_src_a_foc_a_dac_reg:", last_scan.seb_src_a_foc_a_dac_reg())

# seb_src_a_foc_a_dac_reg: -67.45098039215686

print("seb_src_b_foc_a_dac_reg:", last_scan.seb_src_b_foc_a_dac_reg())

# seb_src_b_foc_a_dac_reg: -67.45098039215686

print("meta markers:", last_scan.get_meta_markers())

# Output omitted (out of laziness); it's the same interface that's documented in the TMFile and TMPacket sections

print("m/z values:", last_scan.m_over_z_values())

# (long tuple of doubles)

print("list of TICs:", last_scan.total_ion_counts())

# list of TICs: (3680, 3882, 4092, 4100, 4147, 4119, 4103, 4021, 4214, 4105)

print("cTIC:", last_scan.corrected_total_ion_count())

# cTIC: 1715842

print("cTIC Factor:", last_scan.corrected_total_ion_count_factor())

# cTIC Factor: 42.40522135001076

print("cTIC Factors:", last_scan.corrected_total_ion_count_factors())

# cTIC Factors: ()

print("Packet version:", last_scan.science_data_pkt_version())

# Packet version: 1

print("seb_is_fil_a_on:", last_scan.seb_is_fil_a_on())

# seb_is_fil_a_on: False

print("seb_is_fil_b_on:", last_scan.seb_is_fil_b_on())

# seb_is_fil_b_on: True

print("Scan mode:", last_scan.scan_mode_as_string())

# Scan mode: EI

print("SEB sequence packet indexes:", last_scan.seb_sequence_pkt_indexes())

# SEB sequence packet indexes: (68463, 68464, 68465)

print("EM on times (ms):", last_scan.em_on_times_in_ms())

# EM on times (ms): (600.1000052131712, 0.0)
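Several values in the sample output above are consistent with simple relationships between one another. The sketch below recomputes them from the printed numbers. These relationships are observations from this one scan, not documented definitions, so treat them as a sanity check rather than a specification. In particular, the microsecond unit for seb_bin_time_readback() is inferred from its ratio to seb_bin_time_readback_in_seconds().

```python
import math

# Values copied from the sample output for the last scan of TID 30410.
sub_scan_tics = (3680, 3882, 4092, 4100, 4147, 4119, 4103, 4021, 4214, 4105)  # total_ion_counts()
total_ion_count = 40463              # total_ion_count()
highest_count = 708                  # highest_count()
bin_time_us = 10.000000149011612     # seb_bin_time_readback(), apparently microseconds
bin_time_s = 1.0000000149011612e-05  # seb_bin_time_readback_in_seconds()
bin_count = 5000                     # seb_bin_count_set()
ctic_factor = 42.40522135001076      # corrected_total_ion_count_factor()
ctic = 1715842                       # corrected_total_ion_count()

# Observed (not documented) relationships:
tic_from_sub_scans = sum(sub_scan_tics)        # per-scan TICs add up to the TIC
peak_cps = highest_count / bin_time_s          # compare with peak_counts_per_sec()
scan_time_s = bin_count * bin_time_s           # compare with total_scan_time()
ctic_estimate = total_ion_count * ctic_factor  # compare with corrected_total_ion_count()

print(tic_from_sub_scans == total_ion_count)           # True
print(math.isclose(bin_time_us * 1e-6, bin_time_s))    # True
print(math.isclose(peak_cps, 70799998.9449978))        # True
print(math.isclose(scan_time_s, 0.05000000074505805))  # True
print(abs(ctic_estimate - ctic) < 1)                   # True
```

The ten entries in the TIC list also match num_scans() (10) for this sum packet.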
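The selected_ion_count_* methods summarize a bin range or a mass range of a scan. The rough pure-Python equivalents below are hypothetical helpers, not c699util functions. They work on a list of counts such as the one from counts() and, for the mass variants, a parallel sequence of m/z values such as the one from m_over_z_values(). Two assumptions are not confirmed by this page: that those two sequences line up one value per bin, and that both range endpoints are inclusive. The data in the usage lines is made up.

```python
def ion_count_sum_by_bin(counts, first_bin, last_bin):
    """Sum counts for bins first_bin..last_bin (endpoints assumed inclusive)."""
    return sum(counts[first_bin:last_bin + 1])

def ion_count_max_by_bin(counts, first_bin, last_bin):
    """Largest count in bins first_bin..last_bin, or 0 if the range is empty."""
    return max(counts[first_bin:last_bin + 1], default=0)

def _counts_in_mass_range(counts, mz_values, low_mz, high_mz):
    # Pair each bin's count with its m/z and keep those inside [low_mz, high_mz].
    return [c for mz, c in zip(mz_values, counts) if low_mz <= mz <= high_mz]

def ion_count_sum_by_mass(counts, mz_values, low_mz, high_mz):
    return sum(_counts_in_mass_range(counts, mz_values, low_mz, high_mz))

def ion_count_max_by_mass(counts, mz_values, low_mz, high_mz):
    return max(_counts_in_mass_range(counts, mz_values, low_mz, high_mz), default=0)

# Made-up example data: six bins with their m/z values.
counts = [0, 5, 3, 9, 2, 7]
mz_values = [137.5, 138.0, 138.6, 139.2, 140.0, 140.4]

print(ion_count_sum_by_bin(counts, 1, 3))                  # 17
print(ion_count_max_by_bin(counts, 4, 5))                  # 7
print(ion_count_sum_by_mass(counts, mz_values, 138, 140))  # 19
print(ion_count_max_by_mass(counts, mz_values, 138, 140))  # 9
```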

Interface Differences Between tmread and c699util

This section is intended for those who have used tmread on SAM, LADEE, or MAVEN. My hope is that such users can work with c699util just as they do with tmread, referring to this section as a cheat sheet when needed.
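Most packet differences in the listing below follow one pattern: a tmread attribute becomes a c699util method call, sometimes under a different name. That pattern could be captured in a small adapter for porting old tmread scripts. The sketch below is hypothetical: `TmreadStylePacket` and `FakeCPacket` are not part of either library, and the fake packet returns made-up values. The name mapping is copied from the listing. Timestamp attributes (pkt.timestamp.unix, pkt.timestamp.relative) are left out because tmread returns them through a TMTimestamp object.

```python
# tmread attribute name -> c699util method name, taken from the listing below.
TMREAD_TO_C699UTIL = {
    "type": "get_type",
    "full_type": "full_type",
    "total_length": "total_length",
    "length": "length",
    "checksum": "checksum",
    "gse_created": "gse_created",
    "sequence": "sequence",
    "raw_time": "sclk",
    "marker_text": "marker_text",
    "mkid": "marker_id",
    "message": "message",
}

class TmreadStylePacket:
    """Hypothetical adapter exposing a c699util packet via tmread-style attributes."""

    def __init__(self, c_pkt):
        self._c_pkt = c_pkt

    def __getattr__(self, name):
        # Only called when normal lookup fails, e.g. for pkt.mkid.
        try:
            method_name = TMREAD_TO_C699UTIL[name]
        except KeyError:
            raise AttributeError(name) from None
        return getattr(self._c_pkt, method_name)()

class FakeCPacket:
    """Hypothetical stand-in for a c699util packet, with made-up values."""
    def get_type(self): return 26
    def marker_id(self): return 2050
    def sclk(self): return 123456

pkt = TmreadStylePacket(FakeCPacket())
print(pkt.type, pkt.mkid, pkt.raw_time)  # 26 2050 123456
```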

#!/usr/bin/env python3

import tmread, c699util

tmfile = tmread.get_tmfile(30410) # Give it a TID and let it find the file for us

c_tmfile = c699util.construct_tmfile(tmfile.filename)

AUX_AMP_DAC_SET_HKID = 61

RF_AMP_MON_HKID = 62

#------------------------------------------------------------------------------

# TMFile interface differences

#------------------------------------------------------------------------------

# Getting a TMFile:

tmfile = tmread.get_tmfile(30410) # Can be passed TID or absolute filename

c_tmfile = c699util.construct_tmfile(tmfile.filename) # requires absolute filename

filename = tmfile.filename

filename = c_tmfile.filename()

dir_string = tmfile.directory_string

dir_string = c_tmfile.directory_string()

t0_in_unix_time = tmfile.start_time().unix # start_time() returns a TMTimestamp object

t0_in_unix_time = c_tmfile.t0_in_unix_time() # Returns a float

msg_log = tmfile.message_log

msg_log = c_tmfile.message_log()

#------------------------------------------------------------------------------

# TMPacket interface differences

#------------------------------------------------------------------------------

pkt = tmfile[-1]

c_pkt = c_tmfile[-1]

# Packet type with GSE bit masked out:

pkt_type = pkt.type

pkt_type = c_pkt.get_type()

# Full packet type:

full_pkt_type = pkt.full_type

full_pkt_type = c_pkt.full_type()

total_length = pkt.total_length

total_length = c_pkt.total_length()

# Unlike total_length, this doesn't include the 8-byte header and 4-byte checksum

data_length = pkt.length

data_length = c_pkt.length()

checksum = pkt.checksum

checksum = c_pkt.checksum()

gse_created = pkt.gse_created

gse_created = c_pkt.gse_created()

sequence_number = pkt.sequence

sequence_number = c_pkt.sequence()

fsw_timestamp = pkt.raw_time

fsw_timestamp = c_pkt.sclk()

unix_timestamp = pkt.timestamp.unix # pkt.timestamp is a TMTimestamp object

unix_timestamp = c_pkt.unix_timestamp() # float

relative_timestamp = pkt.timestamp.relative # pkt.timestamp is a TMTimestamp object

relative_timestamp = c_pkt.relative_timestamp() # float

marker_text = pkt.marker_text

marker_text = c_pkt.marker_text()

marker_id = pkt.mkid

marker_id = c_pkt.marker_id()

msg = pkt.message

msg = c_pkt.message()

#

# Housekeeping data interface:

#